Running ComfyUI in the Cloud: Runpod vs. RunningHub — A Practical Guide

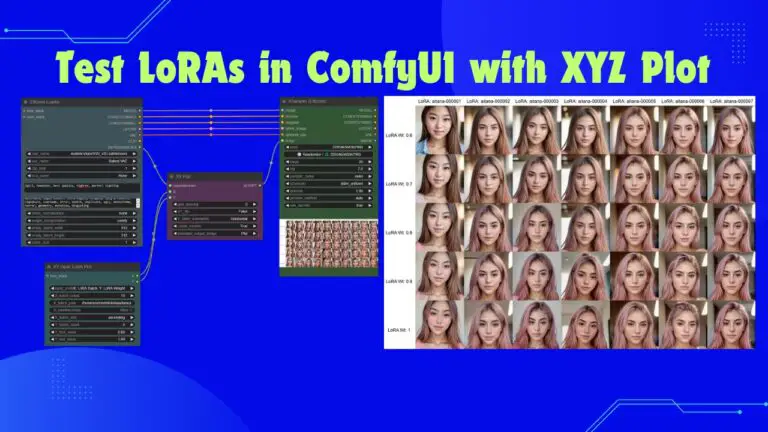

Cloud-based ComfyUI lets you build, test, and iterate on AI image/video workflows without wrestling with local installs or being bottlenecked by your GPU.

In this article, I compare two platforms I actively use—Runpod and RunningHub—to show how each tackles setup, models, nodes, performance, and pricing. If you’re brand-new, you’ll see why RunningHub feels like flipping a switch. If you’re advanced, you’ll learn where Runpod’s full control shines and where RunningHub’s ready-to-run environment saves serious time.

Why Run ComfyUI in the Cloud?

Whether you’re just starting out with ComfyUI or you’re a seasoned pro, cloud-based solutions bring huge advantages that improve both workflow and resource management.

Beginner Advantages

For newcomers, the cloud offers a smooth, frictionless introduction to ComfyUI. With RunningHub, everything is pre-installed and ready to go, meaning you don’t need to worry about downloading models or setting up complex environments.

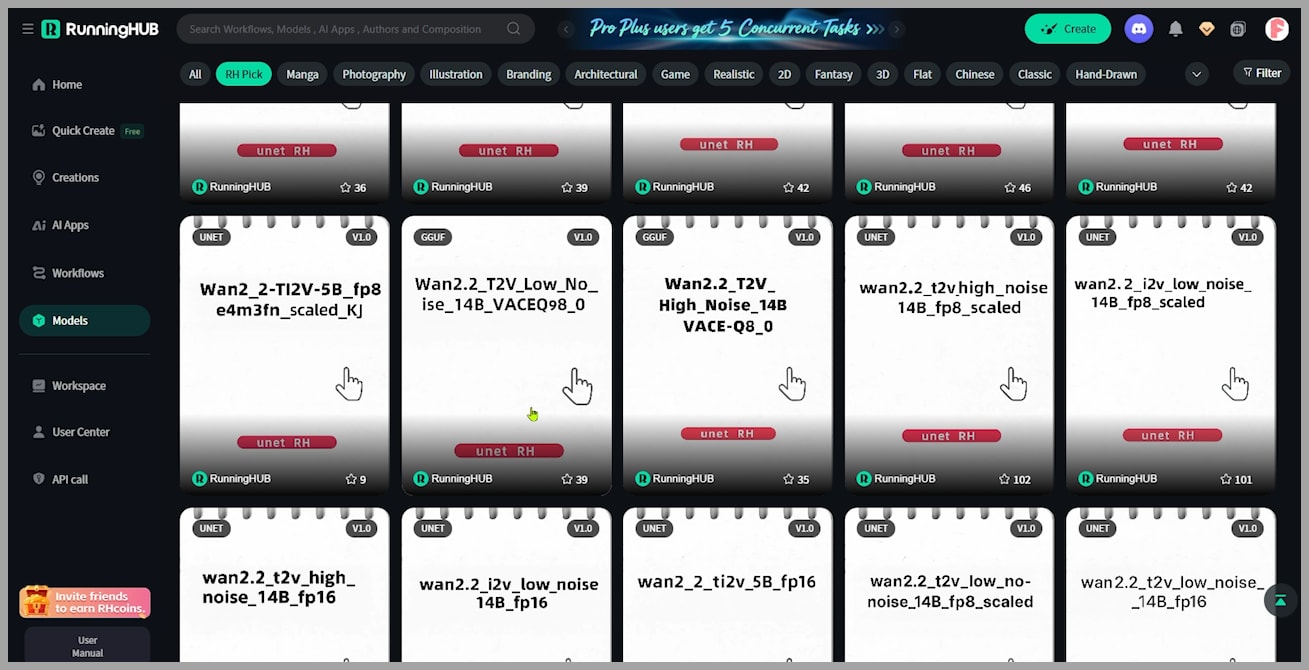

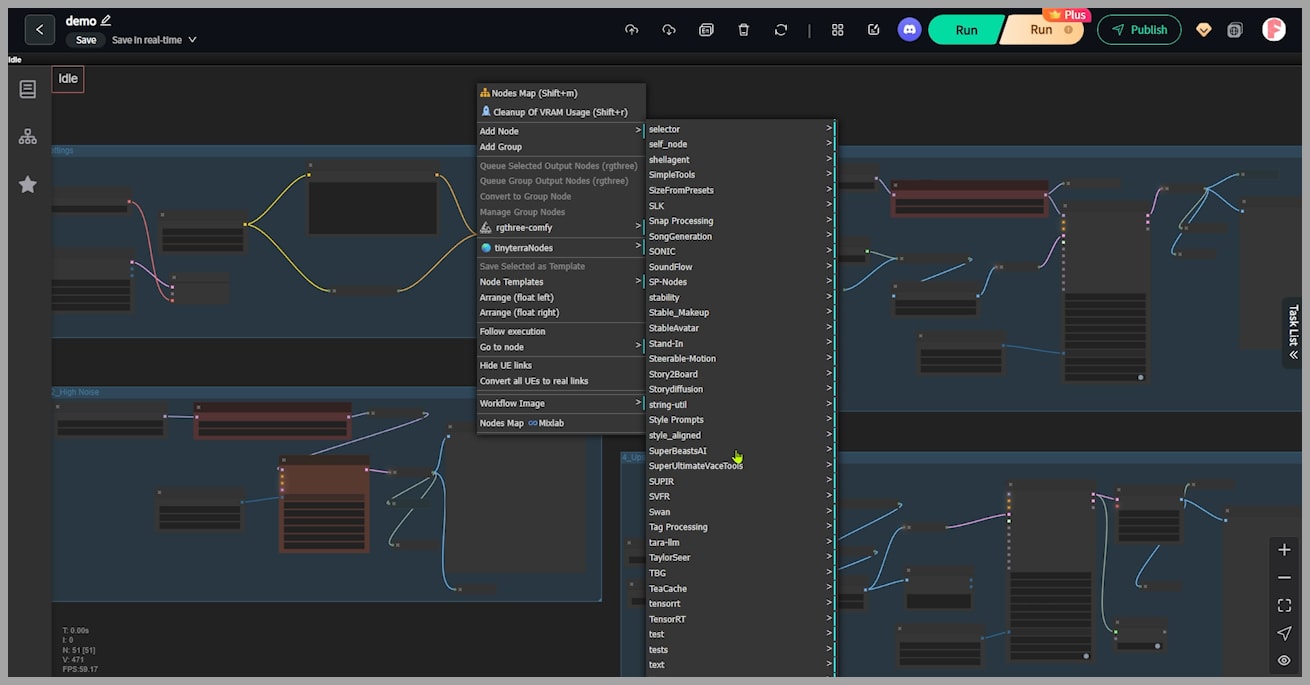

You can dive straight into testing your workflows without spending hours on setup. RunningHub’s platform comes loaded with nearly every node pack and model you might need, so you’re always ready to create. Even better, they keep things updated with the newest features, which means you won’t have to search for missing nodes.

Advanced User Advantages

For advanced users, cloud-based ComfyUI offers flexibility for stress testing and resource-heavy workflows. Sometimes you need to push your workflows to their limits, whether for quality control or to ensure stability under demand. That’s where cloud GPUs come in handy.

Platforms like Runpod provide access to GPUs with massive VRAM (up to 180 GB!), so you can test workflows under different conditions without worrying about running out of GPU memory.
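If you want to confirm how much VRAM a rented GPU actually exposes, a small script run on the server can ask `nvidia-smi`. This is a minimal sketch, assuming the NVIDIA driver tools are installed on the machine; the helper names `parse_vram_mib` and `query_gpus` are mine, not part of any platform.

```python
import shutil
import subprocess


def parse_vram_mib(nvidia_smi_output: str) -> list[tuple[str, int]]:
    """Parse lines of the form '<gpu name>, <total memory in MiB>' into pairs."""
    gpus = []
    for line in nvidia_smi_output.strip().splitlines():
        # Split on the last comma so a GPU name containing commas still parses.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Return (name, total MiB) per GPU, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


if __name__ == "__main__":
    for name, mib in query_gpus():
        print(f"{name}: {mib / 1024:.1f} GiB VRAM")
```

Run it once right after the server starts, before loading a large model.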

Cloud services also make troubleshooting easier. If something breaks in a workflow, you can reset the environment or start fresh on a new instance, and you’re not held back by the hardware limits of your local machine.

RunningHub: Streamlined Simplicity

RunningHub takes the complexity out of running ComfyUI in the cloud by offering a completely ready-to-use environment. As a beginner, or even a pro who wants to skip setup time, RunningHub is incredibly convenient.

Everything you need is pre-installed: the software, the models, and most of the nodes you’ll likely use.

There’s no need to fiddle with installations, configurations, or even downloads—everything is set up for you. This makes it super simple to jump straight into building and running workflows.

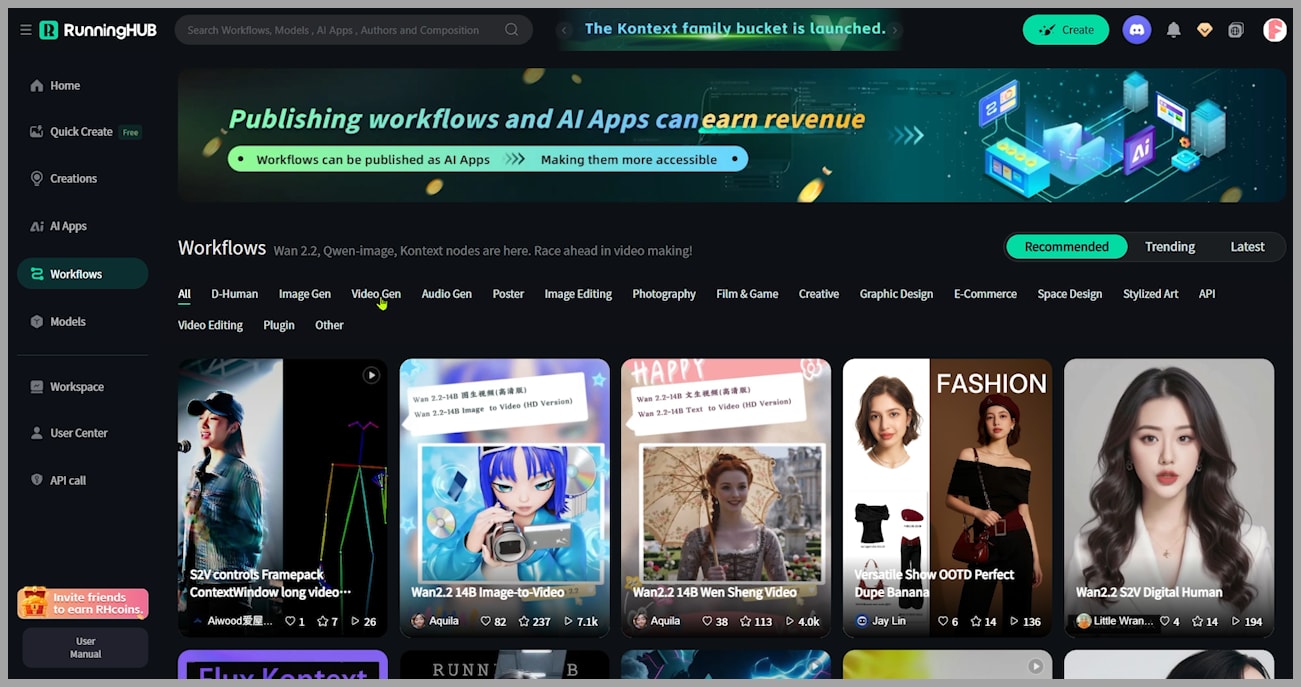

One of the standout features of RunningHub is its workflow library. The platform has a large collection of user-uploaded workflows that you can use right away. These workflows cover a wide range of use cases and are continuously updated, ensuring that you have access to the latest tools.

Whether you’re experimenting with AI-generated images or running custom models, you’ll find workflows that fit your needs—without the hassle of manual setup.

Additionally, RunningHub uses a credit-based system. Credits are spent only when you run a workflow, so you pay for results, not for idle time.

Runpod: Custom Control

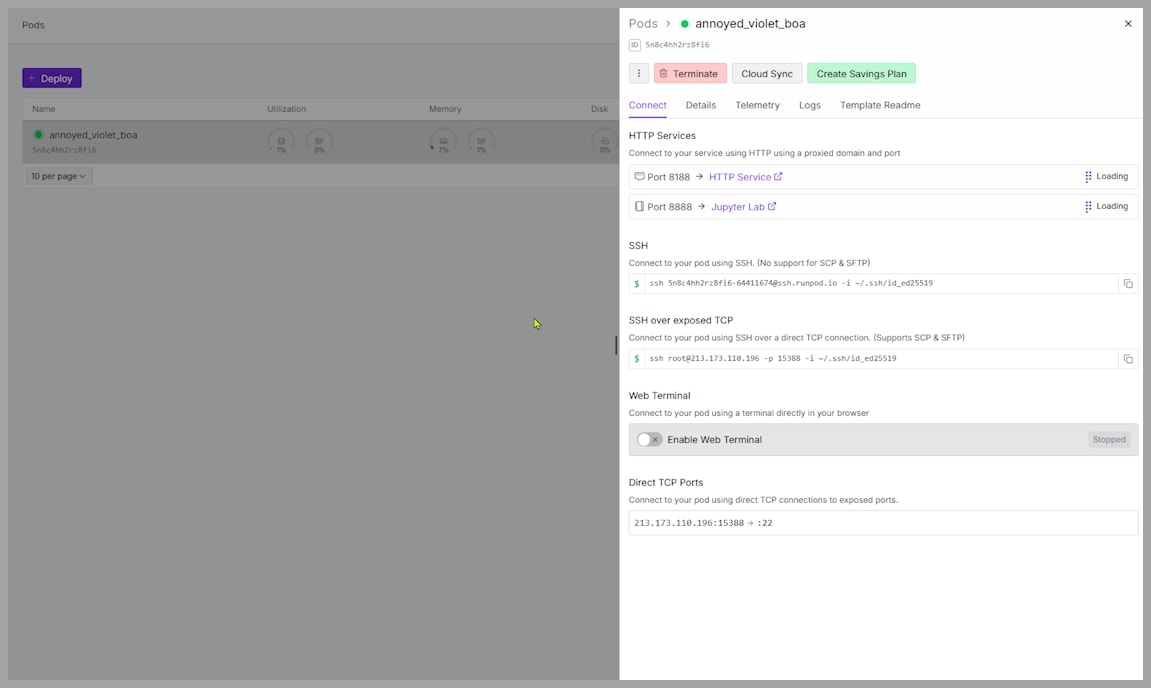

Runpod offers a completely different approach to running ComfyUI in the cloud. Instead of providing a ready-to-go environment like RunningHub, Runpod gives you complete control over your virtual machine (VM) and the environment you set up.

With Runpod, you rent a GPU server by the hour, giving you full flexibility. You choose which GPU to use, the server specs, and how you install ComfyUI and its required models or nodes. This is ideal if you prefer more hands-on control and want to tailor everything to your specific needs.

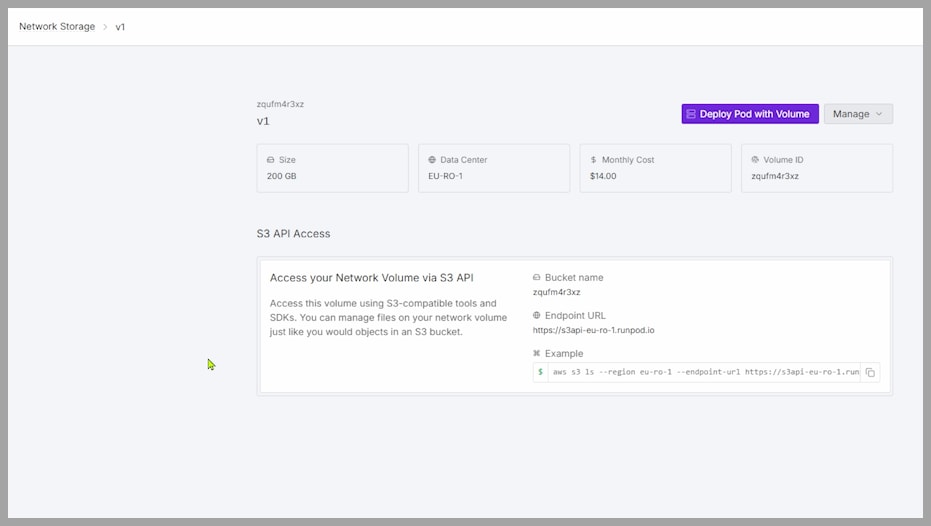

For storage, Runpod uses Network Storage, which lets you rent additional server space for your models and data. This is convenient because your data persists when the GPU server is turned off, as long as you don’t delete it.

Compared with RunningHub, however, this flexibility comes with a steeper learning curve. You need some technical know-how to install ComfyUI and manage dependencies. If you’re new to Linux servers or configuring AI environments, Runpod will take more time to set up and manage.
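To give a sense of what "installing it yourself" involves, here is a minimal sketch of a manual install driven from Python. It assumes the official ComfyUI repository URL, assumes persistent storage is mounted at `/workspace` (adjust to your server), and assumes `git` and Python are already present. Depending on the GPU image, you may also need a specific PyTorch build, which this sketch doesn't handle. By default it only prints the commands; pass `--run` to execute them.

```python
import subprocess
import sys
from pathlib import Path

# ASSUMPTIONS: official repository URL; /workspace is where persistent storage is mounted.
REPO_URL = "https://github.com/comfyanonymous/ComfyUI.git"
INSTALL_DIR = Path("/workspace/ComfyUI")


def install_commands(install_dir: Path = INSTALL_DIR) -> list[list[str]]:
    """Clone ComfyUI, then install its Python requirements with the current interpreter."""
    return [
        ["git", "clone", REPO_URL, str(install_dir)],
        [sys.executable, "-m", "pip", "install", "-r", str(install_dir / "requirements.txt")],
    ]


def launch_command(install_dir: Path = INSTALL_DIR, port: int = 8188) -> list[str]:
    """Start ComfyUI listening on all interfaces so the UI is reachable from outside."""
    return [sys.executable, str(install_dir / "main.py"),
            "--listen", "0.0.0.0", "--port", str(port)]


if __name__ == "__main__":
    commands = install_commands() + [launch_command()]
    if "--run" in sys.argv:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    else:
        # Dry run: show what would be executed.
        for cmd in commands:
            print(" ".join(cmd))
```

Custom nodes and models are separate steps on top of this, which is where most of the dependency management comes in.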

Key Differences: Runpod vs. RunningHub

Both Runpod and RunningHub provide powerful cloud solutions for running ComfyUI, but they operate very differently. Let’s dive into the core differences: setup and resource management, pricing structures, and technical demands.

Setup & Resource Management

- RunningHub excels in its ready-to-use environment. All the software, nodes, and models are pre-installed. This setup eliminates the need for any manual configuration, which is a huge time-saver. You can start using ComfyUI right away, making it incredibly beginner-friendly.

- Runpod, on the other hand, gives you complete control over your environment. You rent a GPU server and manage the installation of ComfyUI and nodes yourself. This flexibility is great for advanced users who want a customized environment, but it does require more effort upfront. You’ll need to manually configure your system and deal with dependency management, which can sometimes be challenging if you’re not familiar with the process.

Pricing Structures

- Runpod uses a pay-as-you-go model. You’re charged for the GPU server rental and the Network Storage used. You pay for the time the server is running rather than for the outputs you generate, which suits users who scale their usage with demand. However, this can become expensive if you leave a server running for long periods, even if you’re not generating outputs.

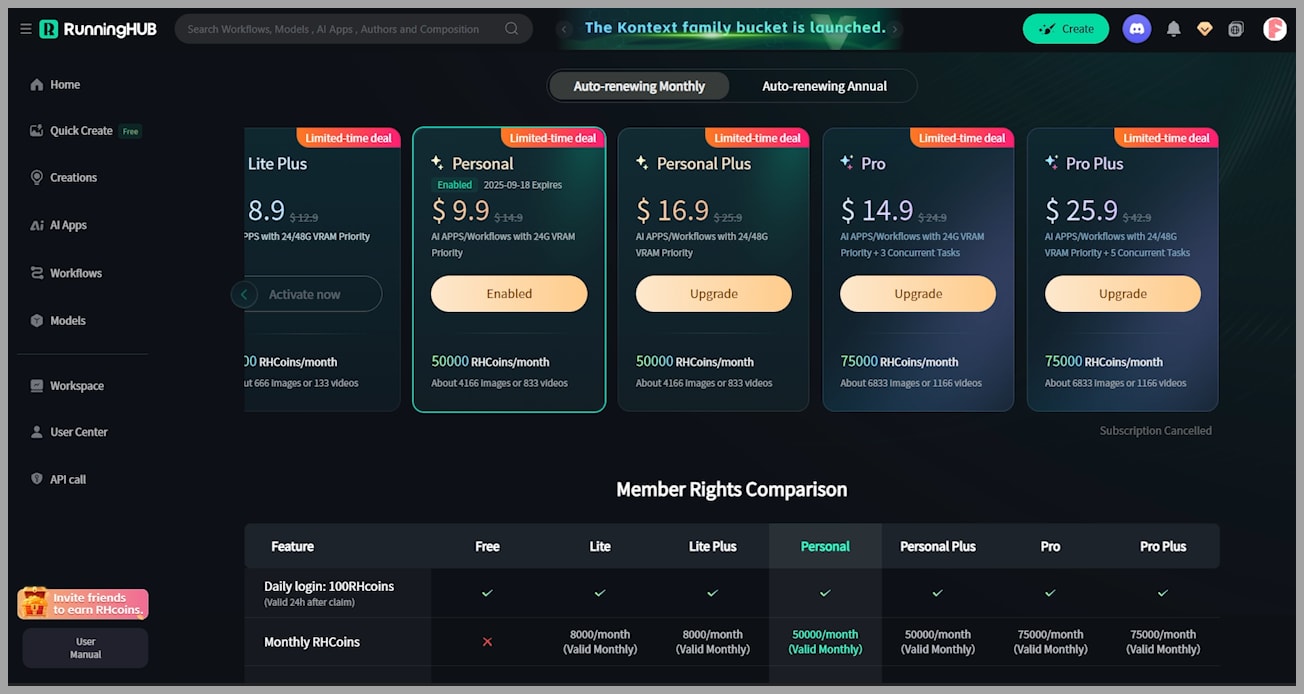

- RunningHub follows a subscription-based pricing model. The most common plan is the Personal plan at $9.99/month, which provides 50,000 credits. You can generate approximately 4,166 images or 833 videos with these credits, depending on the complexity of your workflows. The key advantage here is that you’re only charged when you run a workflow, not while tinkering or setting things up. This can be much cheaper than Runpod for users who spend a lot of time building workflows but don’t always run them.

Accessibility & Technical Demands

- RunningHub is incredibly user-friendly. With everything pre-installed, there’s no need to worry about complex terminal commands or installation processes. It’s designed for users who want a straightforward experience, especially those who don’t want to dive into the technicalities of Linux servers or ComfyUI setup.

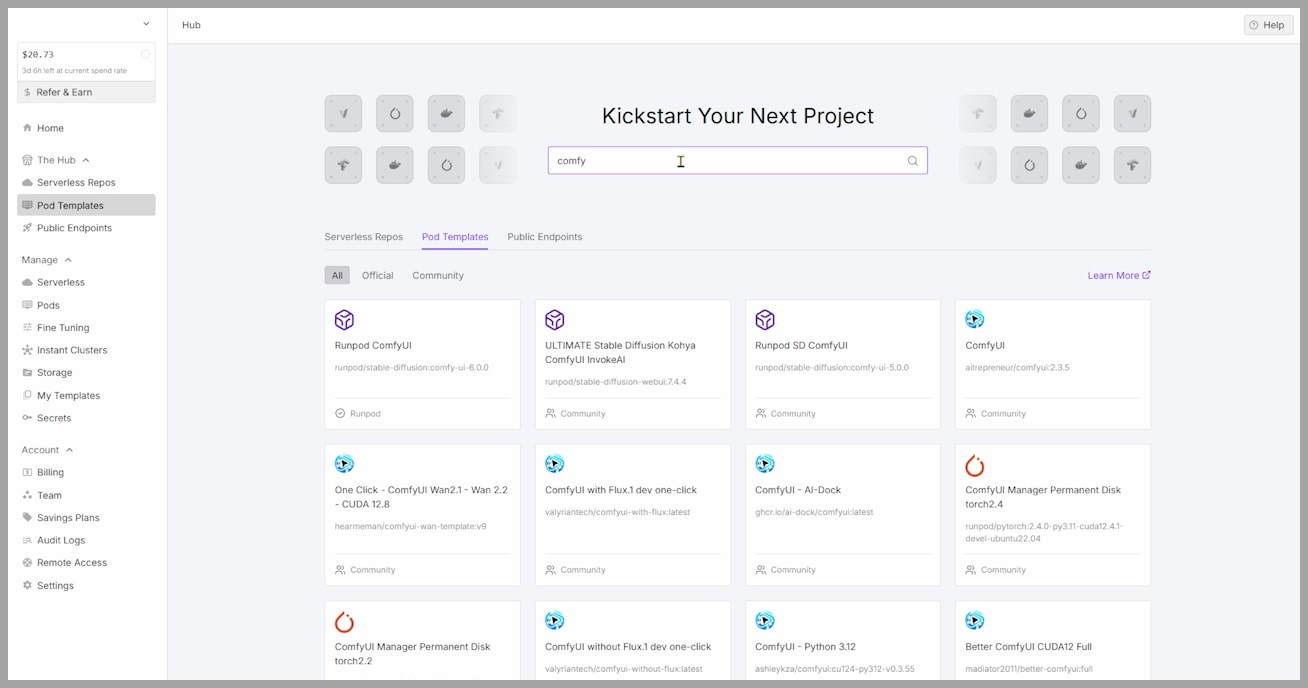

- Runpod has a steeper learning curve. You’ll need to handle server rentals, install the necessary software, and configure dependencies. While templates are available to simplify the process, some of them can be outdated or unreliable, which can lead to additional frustration. Users with a solid understanding of Linux and Python will find Runpod’s flexibility rewarding, but it may be overwhelming for beginners.

Pricing Deep Dive

Cloud costs hinge on how (and how often) you work. Here’s what that looks like for each platform.

Runpod’s Fees

Runpod is pay-as-you-go, split into two meters:

- GPU server rental:

  - You’re billed while the server is running, even if you’re just analyzing or wiring up a workflow before hitting “generate.”

  - Flexibility is the draw: pick exactly the GPU tier you need (from mid-range up to very high-VRAM cards) and scale up or down per session.

- Network Storage (persistent models/data):

  - Your models live on separate, persistent storage so they survive server shutdowns.

  - Billed in 5-minute increments by capacity and duration.

  - Ballpark: ~$14/month for 200 GB.
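The two meters can be sketched as a small calculation. The storage figure comes from the ballpark above ($14 for 200 GB works out to $0.07 per GB per month). The GPU hourly rate and the hours in the example are hypothetical placeholders, so substitute the rate of the GPU you actually rent.

```python
# From the ballpark above: roughly $14/month for 200 GB of Network Storage.
STORAGE_USD_PER_MONTH = 14.0
STORAGE_GB = 200


def storage_usd_per_gb_month() -> float:
    """Implied storage rate: 14 / 200 = $0.07 per GB per month."""
    return STORAGE_USD_PER_MONTH / STORAGE_GB


def monthly_runpod_cost(gpu_usd_per_hour: float, generating_hours: float,
                        idle_hours: float, storage_gb: float) -> dict[str, float]:
    """Split a month's bill into GPU time spent generating, GPU time spent idle, and storage."""
    return {
        "generating": gpu_usd_per_hour * generating_hours,
        "idle": gpu_usd_per_hour * idle_hours,
        "storage": storage_usd_per_gb_month() * storage_gb,
    }


if __name__ == "__main__":
    # HYPOTHETICAL inputs: $0.50/hour GPU, 10 h generating, 30 h building with the server on.
    bill = monthly_runpod_cost(0.50, generating_hours=10, idle_hours=30, storage_gb=200)
    for item, usd in bill.items():
        print(f"{item:>10}: ${usd:.2f}")
    print(f"{'total':>10}: ${sum(bill.values()):.2f}")
```

With these made-up inputs, idle time costs three times as much as the time spent generating, which is why shutting the server down between sessions matters.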

Who saves with Runpod?

- Power users who run long, compute-heavy batches and want total OS-level control.

- Teams/individuals who value persistent model repos and a custom stack.

Who pays more?

- Tinkerers who leave instances running while they iterate (idle time still bills).

- Anyone spending lots of time building vs generating.

Runpod: https://runpod.io?ref=jden1933

RunningHub: https://www.runninghub.ai/?inviteCode=rh-v1241

RunningHub’s Credits

RunningHub is subscription-based and pay-on-generation:

- The popular Personal plan is $9.99/month for 50,000 credits.

- That yields roughly 4,166 images or 833 videos (actual cost varies by workflow).

- Critically, you’re not charged while building, inspecting, or tweaking a graph—credits only burn when you click Run.

- A massive shared model library means no separate storage fees for common models; you can also upload private LoRAs.
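The image and video estimates above imply an average cost per run, which is handy for checking whether a planned month fits in the bundle. This is a rough sketch: the per-image and per-video credit costs are derived from the plan figures quoted here, not from a published rate card, and real workflows will cost more or less.

```python
# Plan figures quoted above.
MONTHLY_PRICE_USD = 9.99
MONTHLY_CREDITS = 50_000
APPROX_IMAGES = 4_166
APPROX_VIDEOS = 833

# Implied averages (assumption: derived by division, real costs vary by workflow).
CREDITS_PER_IMAGE = round(MONTHLY_CREDITS / APPROX_IMAGES)  # about 12 credits
CREDITS_PER_VIDEO = round(MONTHLY_CREDITS / APPROX_VIDEOS)  # about 60 credits


def credits_needed(images: int, videos: int) -> int:
    """Estimated credits for a mix of image and video runs."""
    return images * CREDITS_PER_IMAGE + videos * CREDITS_PER_VIDEO


def fits_in_bundle(images: int, videos: int) -> bool:
    """True if the estimated usage stays within the monthly credit bundle."""
    return credits_needed(images, videos) <= MONTHLY_CREDITS


if __name__ == "__main__":
    usd_per_credit = MONTHLY_PRICE_USD / MONTHLY_CREDITS
    print(f"about ${usd_per_credit * CREDITS_PER_IMAGE:.4f} per image")
    print(f"about ${usd_per_credit * CREDITS_PER_VIDEO:.4f} per video")
    print("1000 images + 500 videos fits:", fits_in_bundle(1000, 500))
```

Under these averages, a video costs about as much as five images, so video-heavy months use up the bundle much faster.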

Who saves with RunningHub?

- Curious builders who spend lots of time experimenting, studying graphs, and iterating between runs.

- Anyone who appreciates predictable monthly budgeting and no storage line item.

Who pays more?

- Users who constantly generate at scale and may exceed the monthly credit bundle.
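One way to compare the two models is to ask how many GPU hours on Runpod cost the same as the RunningHub subscription. A minimal sketch, assuming a hypothetical hourly GPU rate; it compares money only and ignores how much output each option actually produces.

```python
def break_even_gpu_hours(subscription_usd: float, gpu_usd_per_hour: float,
                         storage_usd: float = 0.0) -> float:
    """GPU hours per month at which rental plus storage equals the subscription price."""
    remaining = subscription_usd - storage_usd
    # If storage alone already exceeds the subscription, the break-even point is zero hours.
    return max(0.0, remaining / gpu_usd_per_hour)


if __name__ == "__main__":
    # HYPOTHETICAL rate of $0.50/hour against the $9.99 Personal plan.
    print(f"{break_even_gpu_hours(9.99, 0.50):.2f} GPU hours")
    # Same comparison with the ~$14/month storage ballpark included.
    print(f"{break_even_gpu_hours(9.99, 0.50, storage_usd=14.0):.2f} GPU hours")
```

If your server would be running for more hours than the break-even figure, most of them spent building rather than generating, the subscription is likely the cheaper route.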

Limitations to Consider

While both platforms offer powerful cloud solutions for ComfyUI, each comes with its own set of limitations that could impact your workflow depending on your needs.

RunningHub’s Cache Constraints

One of the main limitations of RunningHub is the shared server environment. Since you’re often using a server shared with other users, the cache is cleared after each workflow run. This means that if you make a small tweak to a workflow and re-run it, the run often starts from scratch instead of reusing earlier results. This can be particularly frustrating with complex or resource-heavy workflows that take a long time to process.

For workflows that require extensive computation or large datasets, this can result in inefficiencies and longer processing times, especially if you frequently need to adjust and re-run parameters.
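To illustrate why a cleared cache hurts, here is a toy model of node-level caching. It is not RunningHub’s or ComfyUI’s actual implementation; the class, node names, and three-step workflow are made up for illustration. It counts how many nodes really execute when you change one parameter and re-run.

```python
from typing import Any, Callable


class NodeCache:
    """Toy cache keyed by (node name, inputs); counts how often a node really executes."""

    def __init__(self) -> None:
        self._store: dict[tuple, Any] = {}
        self.executions = 0

    def run(self, node: str, inputs: tuple, fn: Callable[..., Any]) -> Any:
        key = (node, inputs)
        if key not in self._store:
            self.executions += 1
            self._store[key] = fn(*inputs)
        return self._store[key]

    def clear(self) -> None:
        self._store.clear()


def run_workflow(cache: NodeCache, prompt: str, steps: int) -> str:
    """Hypothetical three-node graph: load a model, encode the prompt, sample an image."""
    model = cache.run("load_model", ("checkpoint-a",), lambda name: f"model<{name}>")
    cond = cache.run("encode_prompt", (model, prompt), lambda m, p: f"cond<{p}>")
    return cache.run("sample", (model, cond, steps), lambda m, c, s: f"image<{c}|{s}>")


def executions_for_tweak(clear_between_runs: bool) -> int:
    """Run once, change only the step count, run again; return total node executions."""
    cache = NodeCache()
    run_workflow(cache, "a red fox", steps=20)
    if clear_between_runs:
        cache.clear()
    run_workflow(cache, "a red fox", steps=30)
    return cache.executions


if __name__ == "__main__":
    print("cache kept:   ", executions_for_tweak(clear_between_runs=False))
    print("cache cleared:", executions_for_tweak(clear_between_runs=True))
```

With the cache kept, only the sampling node runs again after the tweak. With the cache cleared, every node runs twice.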

Runpod’s Complexity Barriers

Runpod is built for users who want more control, but that control comes with complexity. The most notable challenge is the setup process—installing ComfyUI and all the required nodes can be a hassle, especially if you’re new to server management or Linux environments.

Even though templates are available to streamline installation, many of them are outdated or unreliable. Sometimes, they don’t play well with newer versions of Python or specific tools, and troubleshooting can eat up a lot of your time.
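Before spending time debugging a template, it helps to check the basics of the environment it gave you. The sketch below reports the Python version and whether key packages can be imported. The minimum version and the package list are assumptions for illustration, so set them to whatever your ComfyUI version and custom nodes require.

```python
import importlib.util
import sys


def check_environment(min_python: tuple[int, int] = (3, 10),
                      required: tuple[str, ...] = ("torch",)) -> list[str]:
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    if sys.version_info[:2] < min_python:
        found = ".".join(map(str, sys.version_info[:2]))
        wanted = ".".join(map(str, min_python))
        problems.append(f"Python {found} is older than required {wanted}")
    for module in required:
        # find_spec returns None when a top-level package is not installed.
        if importlib.util.find_spec(module) is None:
            problems.append(f"missing package: {module}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    if issues:
        print("\n".join(issues))
    else:
        print("environment looks OK")
```

A failed check here usually means the template is out of date, and switching templates is faster than patching it.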

If you’re looking for a quick and simple solution, Runpod might feel more like a barrier than a benefit. To get the most out of it, you’ll often need some technical skills in Linux and Python, which makes it less beginner-friendly than RunningHub.

Conclusion & Recommendations

Both Runpod and RunningHub provide unique advantages for running ComfyUI in the cloud, but the best option depends on your specific needs and experience level.

- RunningHub is ideal for beginners or anyone who values simplicity and convenience. Its pre-installed environment, easy-to-use interface, and shared model library make it a great choice if you want to dive right into creating workflows without the headaches of setup and configuration. The credit-based pricing ensures you only pay for what you use, which is perfect if you’re experimenting and don’t need to run intensive workflows frequently. However, the shared servers and the cache constraints can be limiting for complex, long-running workflows.

- Runpod, on the other hand, is a better fit for advanced users or those who need complete control over their cloud environment. The ability to rent specific GPUs and manage your installation process offers a great deal of flexibility, especially for customized workflows and large-scale projects. While Runpod’s setup and management are more complex, the pay-as-you-go model makes it appealing for users who need high-performance GPU power on demand. The downside is that Runpod can be frustrating for those not familiar with Linux and server management.
