Tired of OpenAI’s Code Interpreter Limitations? Open Interpreter on GitHub to the Rescue

You don’t have to be a programming wizard to dive into data analytics anymore. Thanks to OpenAI’s Code Interpreter, a feature available to ChatGPT Plus subscribers, many non-coders can now take on analysis work that used to require a professional data analyst.

The feature launched as “Code Interpreter” and was later renamed “Advanced Data Analysis.” To keep things simple, this article sticks with the original name, Code Interpreter.

Code Interpreter is impressive enough that some users call it “GPT-4.5.” Imagine this: you’re faced with a table full of data. All you have to do is tell ChatGPT, in everyday language, what you want to analyze.

Voilà! Code Interpreter becomes your personal coding assistant, writing and running the Python code that analyzes the data for you. It’s almost like having a data scientist at your beck and call! It can also batch-process documents, images, videos, and more.

However, it has its limitations. One is that every file must be uploaded to the ChatGPT interface, which can be cumbersome.

For instance, to batch-rename the files in a folder, you first upload them to ChatGPT, let it generate and run the renaming code, and then download the renamed files.

Today I want to share Open Interpreter, a free, open-source project that addresses some of these limitations. It runs a Code Interpreter-style assistant right on your local machine: open your terminal, start it, and work with your files directly, with no uploads or downloads.
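To make the batch-renaming example concrete, here is a minimal sketch of the kind of script such a request might produce. Run locally, it renames the files in place, so there is no upload or download step. The function name `batch_rename`, the `prefix_001` numbering scheme, and the `.txt` filter are illustrative assumptions, not output captured from either tool.

```python
from pathlib import Path


def batch_rename(folder: str, prefix: str, suffix: str = ".txt") -> list[str]:
    """Rename files ending in `suffix` to prefix_001, prefix_002, ... and return the new names.

    Hypothetical helper for illustration only.
    """
    root = Path(folder)
    # Collect the files first (sorted, so numbering is deterministic)
    # instead of renaming while iterating over the directory.
    files = sorted(p for p in root.iterdir() if p.is_file() and p.suffix == suffix)
    new_names = []
    for index, path in enumerate(files, start=1):
        target = path.with_name(f"{prefix}_{index:03d}{suffix}")
        if target != path:
            if target.exists():
                # Refuse to overwrite a file that is already there.
                raise FileExistsError(target)
            path.rename(target)  # renamed in place on the local disk
        new_names.append(target.name)
    return new_names
```

Collecting the file list before renaming avoids iterating over a directory while it changes, and the existence check keeps the sketch from silently clobbering files on systems where a rename would overwrite.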

Project Description

Open Interpreter lets large language models run code on your local machine to complete a wide range of tasks.

It can execute Python, JavaScript, shell commands, and more. In essence, think of it as Code Interpreter with far fewer restrictions.

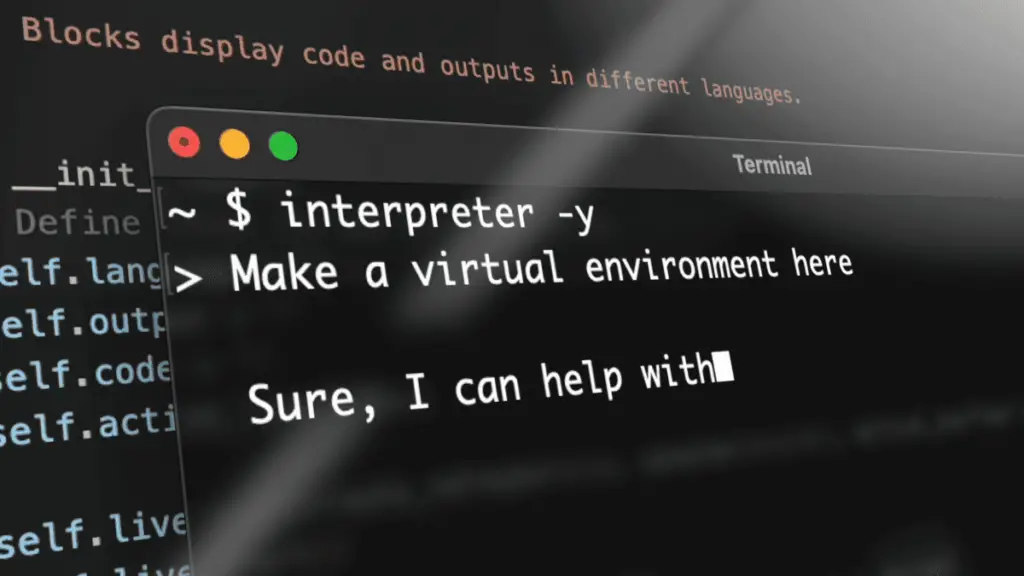

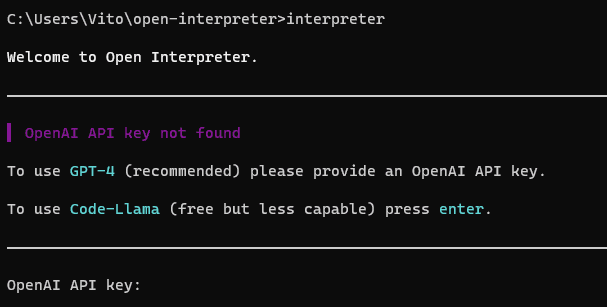

Just type the command “interpreter” into your terminal, and voilà: a user-friendly chat interface, much like ChatGPT, springs to life!

The real game-changer here is its data analysis feature. But let’s not pigeonhole it as just another Code Interpreter; Open Interpreter transcends that boundary.

Because it runs locally, it works with your files in place, with no uploads needed for analysis. It also has internet access, so if some Python libraries are missing, it can download and install them automatically.
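Automatic installation boils down to "check whether a module can be imported; if not, pip-install it." The helper below is a hypothetical sketch of that idea using only the standard library; it is not Open Interpreter's internal code, and `ensure_package` is a name introduced here for illustration.

```python
import importlib
import importlib.util
import subprocess
import sys
from typing import Optional


def ensure_package(module_name: str, pip_name: Optional[str] = None) -> bool:
    """Make sure `module_name` can be imported, installing it with pip if it can't.

    Returns True if an install was performed, False if the module was already present.
    """
    if importlib.util.find_spec(module_name) is not None:
        return False  # already importable, nothing to do
    # Invoke pip through the current interpreter so the package lands in the
    # same environment that will import it.
    subprocess.run(
        [sys.executable, "-m", "pip", "install", pip_name or module_name],
        check=True,  # raise CalledProcessError if the install fails
    )
    importlib.invalidate_caches()  # make the freshly installed package visible
    return True


# The import name and the pip name can differ: PyMuPDF, for example, is imported as `fitz`.
# ensure_package("fitz", pip_name="PyMuPDF")
```

Calling pip via `sys.executable -m pip` rather than a bare `pip` command avoids installing into a different Python than the one running the code.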

Moreover, while Code Interpreter limits the size of uploaded files, Open Interpreter has no upload limit: it can work with files of any size on your computer, bounded only by your machine’s resources. Imagine the convenience! For example, it can:

- Add watermarks to PDFs in batch

- Merge and split PDFs en masse

- Extract tables and images from multiple PDFs

- Set password protection for numerous PDFs

- Convert multiple document formats in one go

The versatility doesn’t stop there. Open Interpreter can also work wonders with image manipulation:

- Transform images into GIF animations

- Add watermarks to images

- Perform face recognition

- Reduce image noise

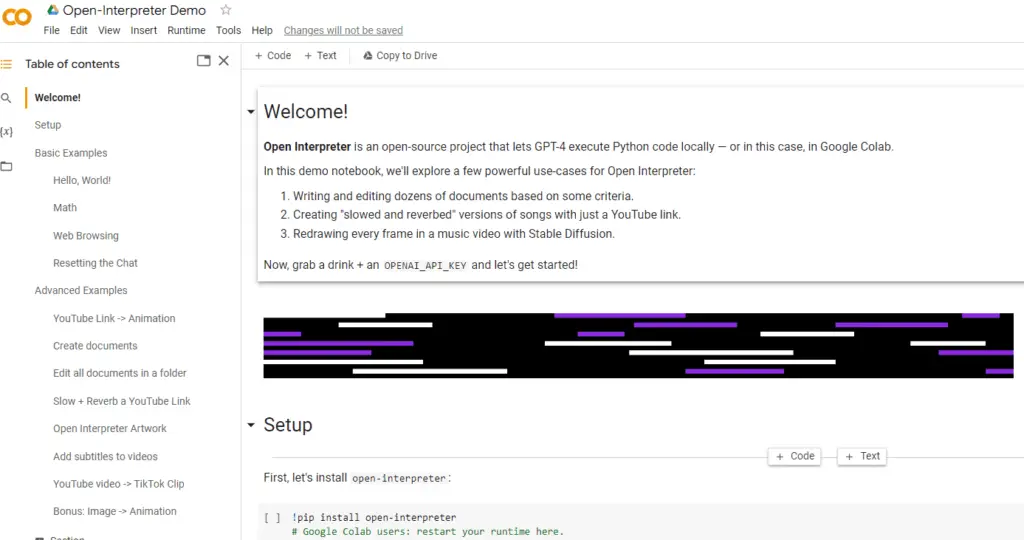

If you’re not a fan of local setups, you’ll be pleased to know that Open Interpreter can also be used through Google Colab. The project even provides a number of ready-to-use example notebooks.

Installation Instructions

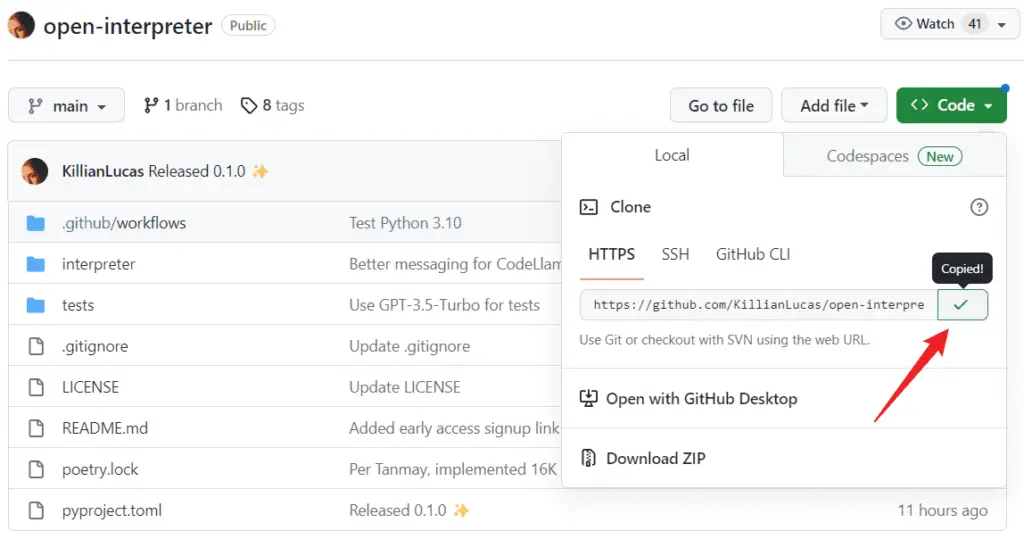

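1️⃣Initial Steps: Before diving in, make sure Python and Git are installed on your computer. Then open a terminal and clone the project onto your machine. (Strictly speaking, cloning is optional: `pip install open-interpreter` in step 3 downloads the package from PyPI, so the clone is only needed if you want to browse or modify the source.)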

2️⃣Navigate: Move into the project’s folder.

```shell
cd open-interpreter
```

3️⃣Installation: Install the package with pip.

```shell
pip install open-interpreter
```

4️⃣Getting Started: Type `interpreter` to open the chat interface, where the real magic begins.

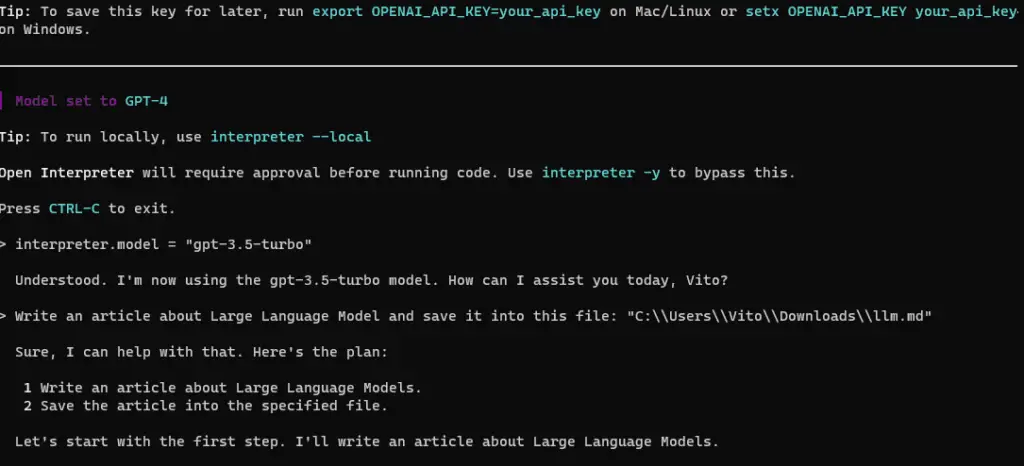

5️⃣API Key: To use GPT-4 (highly recommended) or GPT-3.5-Turbo, you’ll need an OpenAI API key. Paste it into the terminal when prompted, or set it beforehand as the `OPENAI_API_KEY` environment variable so you aren’t asked every time.
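If you go the environment-variable route, a quick pre-flight check can confirm the key is visible before you launch. This is a hypothetical helper, assuming the standard `OPENAI_API_KEY` variable name used by OpenAI's client libraries; it never prints the full key.

```python
import os
from typing import Mapping, Optional


def find_openai_key(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the OpenAI API key from the environment, or None if it isn't set."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    return key or None


def masked(key: str) -> str:
    """Hide all but the last four characters (and hide everything for short values)."""
    visible = key[-4:] if len(key) > 8 else ""
    return "*" * (len(key) - len(visible)) + visible


if __name__ == "__main__":
    key = find_openai_key()
    if key is None:
        print("OPENAI_API_KEY is not set; expect to be asked for a key.")
    else:
        print(f"Found API key {masked(key)}")
```

Passing `env` explicitly makes the lookup easy to test without touching your real environment.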

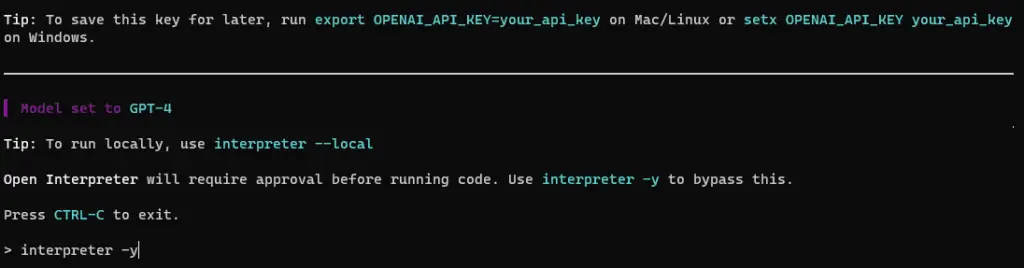

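6️⃣Security Measures: Open Interpreter asks for your confirmation every time it wants to execute code, because that code runs directly on your machine. If you find this repetitive, you can skip confirmations by launching with `interpreter -y` or, in Python, setting `interpreter.auto_run = True`, at the cost of no longer reviewing each piece of code before it runs.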
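The confirmation step is a simple gate between "code was generated" and "code was executed." The toy function below models that gate and the effect of an `auto_run` switch; it is a simplified illustration, not Open Interpreter's implementation, and its prompt wording is an assumption. The `ask` parameter is injectable so the gate can be tested without a keyboard.

```python
from typing import Callable


def run_with_confirmation(
    code: str,
    auto_run: bool = False,
    ask: Callable[[str], str] = input,
) -> bool:
    """Execute `code` only after the user approves it, or immediately if auto_run is True.

    Returns True if the code was executed, False if the user declined.
    """
    if not auto_run:
        print(code)  # show the user exactly what is about to run
        answer = ask("Run this code? (y/n) ")
        if answer.strip().lower() not in ("y", "yes"):
            return False  # declined: nothing is executed
    # Fresh, empty namespace; a real tool would isolate this in a separate process.
    exec(code, {})
    return True
```

With `auto_run=True` the `ask` callback is never consulted, which is exactly why skipping confirmations trades safety for convenience.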

7️⃣Model Choice: By default, GPT-4 is in the driver’s seat. To switch to gpt-3.5-turbo, simply type `interpreter --fast`. If you’re in a Python environment, use `interpreter.model = "gpt-3.5-turbo"`.
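To see how the command-line flags line up with the Python settings, here is a toy `argparse` model of the three flags this guide mentions (`-y`, `--fast`, `--local`). It is not Open Interpreter's actual CLI code: `parse_settings`, the `"codellama"` label, and the rule that `--local` wins over `--fast` are assumptions made for illustration.

```python
import argparse


def parse_settings(argv: list[str]) -> dict:
    """Map the flags described in this guide onto settings (a toy model, not the real CLI)."""
    parser = argparse.ArgumentParser(prog="interpreter")
    parser.add_argument("-y", dest="auto_run", action="store_true",
                        help="run code without asking for confirmation")
    parser.add_argument("--fast", action="store_true", help="use gpt-3.5-turbo")
    parser.add_argument("--local", action="store_true", help="use Code Llama locally")
    args = parser.parse_args(argv)
    if args.local:
        model = "codellama"  # placeholder label for the local model
    elif args.fast:
        model = "gpt-3.5-turbo"
    else:
        model = "gpt-4"  # the default described above
    # These keys mirror the Python attributes interpreter.model / interpreter.auto_run.
    return {"model": model, "auto_run": args.auto_run}
```

For example, `parse_settings(["--fast", "-y"])` corresponds to setting `interpreter.model = "gpt-3.5-turbo"` and `interpreter.auto_run = True` from Python.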

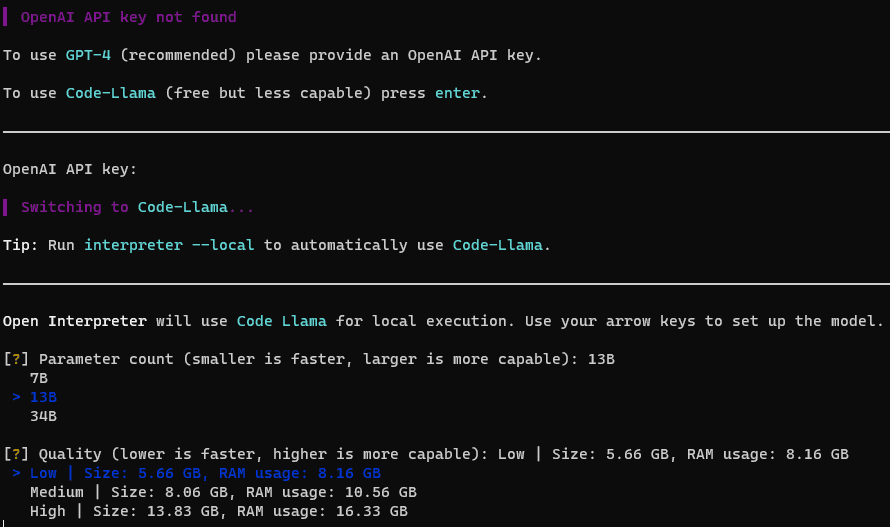

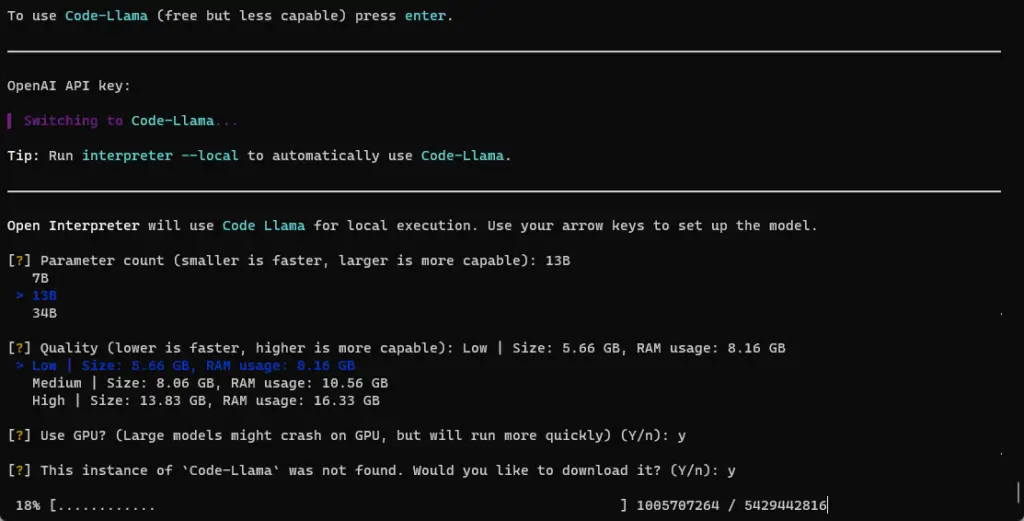

8️⃣Fallback to CodeLlama: No API Key? No problem! Open Interpreter will default to the open-source CodeLlama. If this is your first time, you’ll be prompted to choose a version based on size and quality. Your options are:

- 7B Model:
  - Low Quality (3.01 GB)
  - Medium Quality (4.24 GB)
  - High Quality (7.16 GB)
- 13B Model:
  - Low Quality (5.66 GB)
  - Medium Quality (8.06 GB)
  - High Quality (13.83 GB)
- 34B Model:
  - Low Quality (14.21 GB)
  - Medium Quality (20.22 GB)
  - High Quality (35.79 GB)
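If disk space is the deciding factor, the choice can be made mechanical. The sketch below encodes the download sizes listed above and picks the largest file that fits a budget. Treating "bigger download" as "better model" is a rough heuristic assumed here, and memory requirements are not modeled; `pick_variant` is a hypothetical name.

```python
from typing import Optional, Tuple

# Download sizes in GB for each Code Llama variant, as listed above.
CODE_LLAMA_VARIANTS = {
    ("7B", "Low"): 3.01,
    ("7B", "Medium"): 4.24,
    ("7B", "High"): 7.16,
    ("13B", "Low"): 5.66,
    ("13B", "Medium"): 8.06,
    ("13B", "High"): 13.83,
    ("34B", "Low"): 14.21,
    ("34B", "Medium"): 20.22,
    ("34B", "High"): 35.79,
}


def pick_variant(budget_gb: float) -> Optional[Tuple[str, str, float]]:
    """Return (model size, quality, GB) for the largest download within budget, or None."""
    fitting = [
        (size, params, quality)
        for (params, quality), size in CODE_LLAMA_VARIANTS.items()
        if size <= budget_gb
    ]
    if not fitting:
        return None  # even the smallest variant is too big
    size, params, quality = max(fitting)  # largest file as a rough proxy for quality
    return params, quality, size
```

With a 10 GB budget, for instance, this heuristic lands on the 13B Medium variant (8.06 GB) rather than the 7B High one.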

9️⃣Offline Setup: If your network isn’t the most reliable, you can download the model files manually and place them in the models folder, which on Windows is "C:\Users\<your username>\AppData\Local\Open Interpreter\Open Interpreter\models".
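On Windows, `C:\Users\<your username>\AppData\Local` is what the `%LOCALAPPDATA%` environment variable normally points to, so the folder above can be built from it rather than typed by hand. The sketch below does that and copies a manually downloaded model file into place; `models_dir` and `place_model` are hypothetical helpers, and the model file name is whatever you downloaded.

```python
import shutil
from pathlib import Path, PureWindowsPath


def models_dir(local_appdata: str) -> PureWindowsPath:
    """Build the Windows models folder quoted above from a %LOCALAPPDATA% value."""
    return PureWindowsPath(local_appdata) / "Open Interpreter" / "Open Interpreter" / "models"


def place_model(downloaded_file: str, target_dir: str) -> Path:
    """Copy a downloaded model file into `target_dir`, creating the folder if needed."""
    dest_dir = Path(target_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    # copy2 keeps file timestamps; the original download is left where it was.
    return Path(shutil.copy2(downloaded_file, dest_dir))


# On Windows (file name is a placeholder):
# import os
# place_model(r"C:\Downloads\<model file>", str(models_dir(os.environ["LOCALAPPDATA"])))
```

`PureWindowsPath` is used for the path arithmetic so the same code produces the Windows-style path regardless of the operating system it is run on.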

🔟Subsequent Use: The next time you fancy CodeLlama, just type `interpreter --local`.

There you go! That’s a step-by-step guide to getting Open Interpreter up and running, a tool that offers both flexibility and power for real-world tasks.

My User Experience

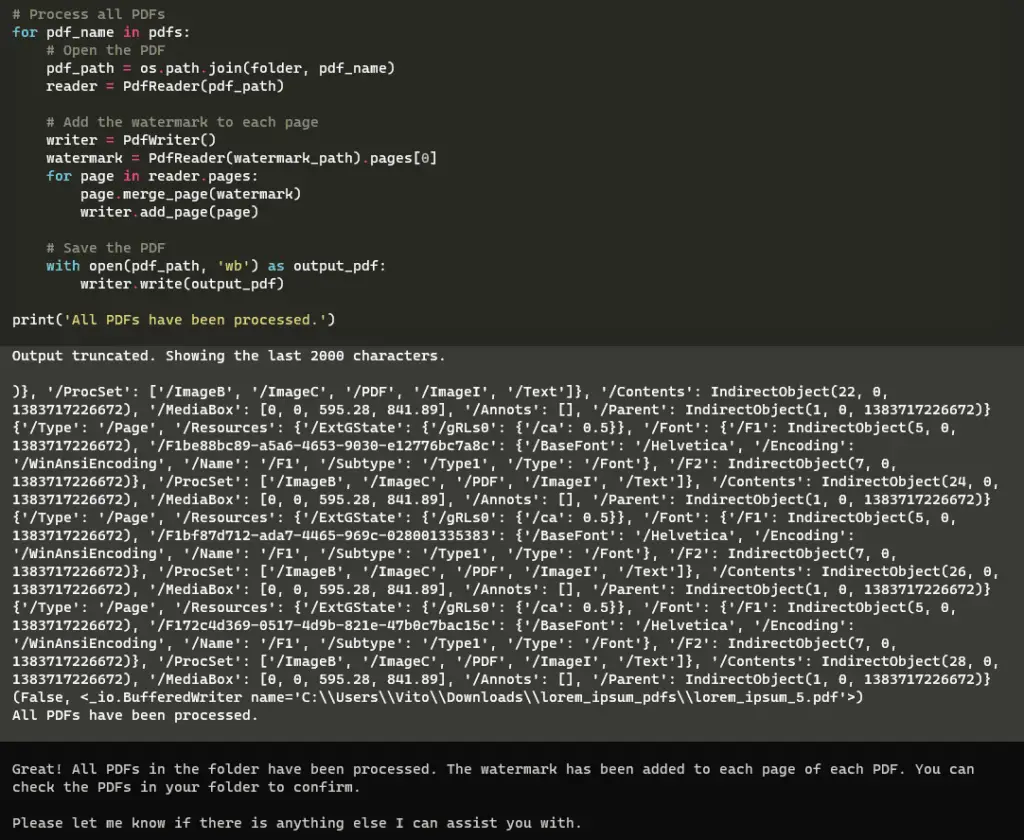

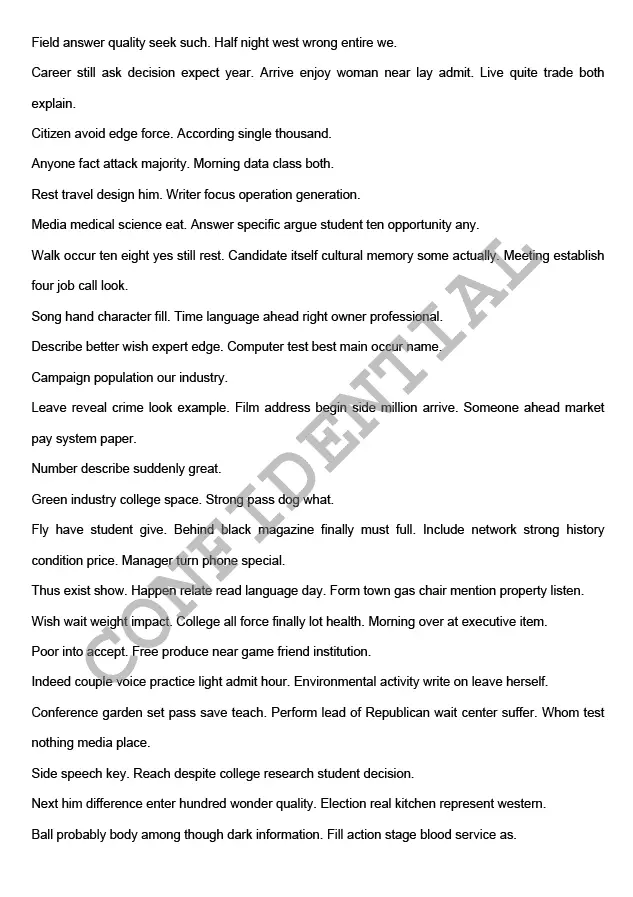

Imagine my joy when Open Interpreter completed a complex task I had in mind: watermarking an entire folder of PDFs. Here’s the prompt I used:

> Please add a diagonal watermark to the center of each page in multiple PDFs found in the folder ("C:\\Users\\Vito\\Downloads\\lorem_ipsum_pdfs"). The watermark should be scaled to 75% of the target page size. The content of the watermark should be "credential" written in Courier-Bold font, gray color, with 50% opacity.

After a bit of debugging, the job was done, right to my specifications.
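Behind that prompt sits a bit of geometry that any generated script has to get right before drawing anything. The sketch below computes it, assuming "scaled to 75% of the target page size" means the text spans 75% of the page diagonal and "gray" means 50% gray; both are interpretations, not what Open Interpreter actually produced. It relies on the fact that every glyph in the standard PDF Courier fonts, Courier-Bold included, is 600/1000 of the font size wide. The drawing itself would be done with a PDF library such as ReportLab and is not shown.

```python
import math

# Standard PDF Courier fonts are monospaced: each glyph is 0.6 x the font size wide.
COURIER_ADVANCE = 0.6


def diagonal_watermark_layout(page_width: float, page_height: float,
                              text: str = "credential", scale: float = 0.75) -> dict:
    """Compute center, rotation and font size for a centered diagonal watermark.

    Units are PDF points (1/72 inch). Assumes the text should span `scale`
    of the page diagonal.
    """
    diagonal = math.hypot(page_width, page_height)
    # Angle of the bottom-left to top-right diagonal, in degrees.
    angle = math.degrees(math.atan2(page_height, page_width))
    # Solve COURIER_ADVANCE * font_size * len(text) == scale * diagonal for font_size.
    font_size = scale * diagonal / (COURIER_ADVANCE * len(text))
    return {
        "center": (page_width / 2, page_height / 2),
        "angle_deg": angle,
        "font_size": font_size,
        "fill": {"gray": 0.5, "alpha": 0.5},  # assumed 50% gray at 50% opacity
    }


layout = diagonal_watermark_layout(612, 792)  # US Letter page
print(f"{layout['angle_deg']:.1f} deg, {layout['font_size']:.1f} pt")  # 52.3 deg, 125.1 pt
```

Because the layout is derived from each page's own width and height, mixed page sizes within one folder get correctly scaled watermarks without special handling.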

The task did require installing several Python libraries, but here’s the upside: unlike in OpenAI’s Code Interpreter, whose sandbox has no internet access and does not persist between sessions, these libraries now live on my computer, ready for future use. No reinstallation needed!

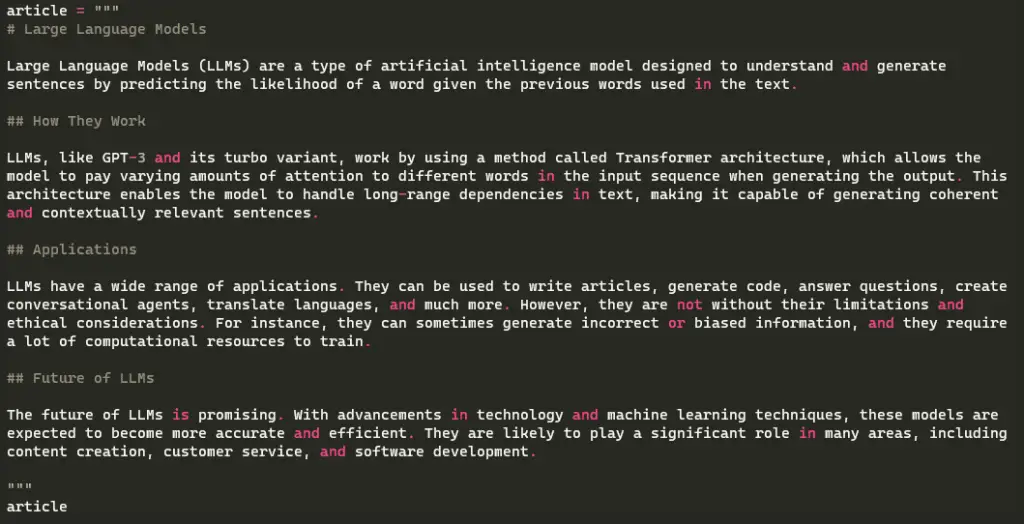

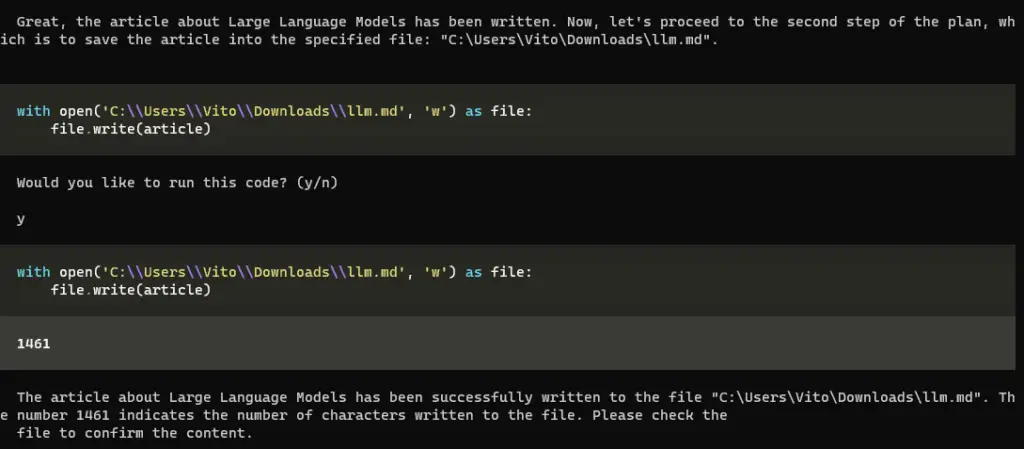

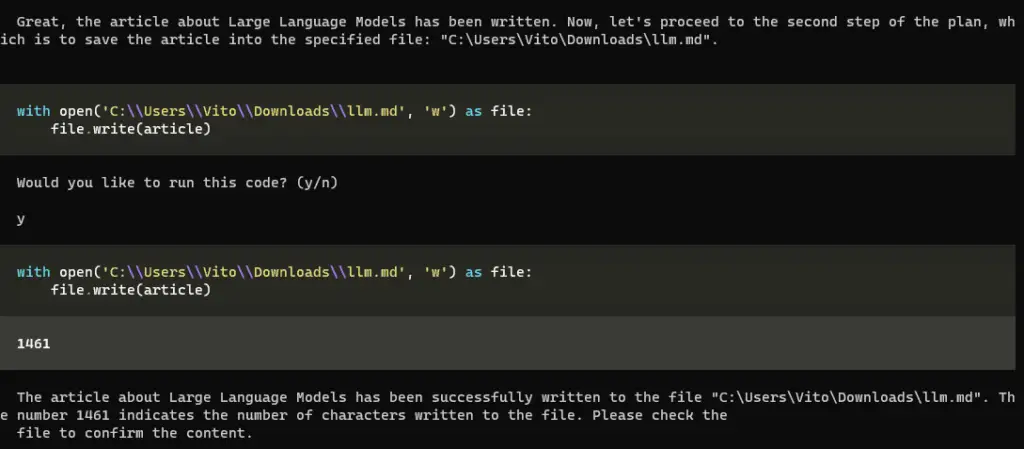

What about the cost? The GPT-4 API isn’t exactly cheap, so I experimented with gpt-3.5-turbo for simpler tasks. I asked it to draft an article about large language models and save it as a Markdown file.

The moment of truth came when I opened the file in Typora: there it was, an article written just for me.
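The "save it as a Markdown file" half of that request is ordinary file I/O; the model's contribution is the text itself. Here is a minimal sketch of that last step, with a hypothetical `save_markdown` helper and placeholder section content standing in for whatever the model writes.

```python
from pathlib import Path


def save_markdown(path: str, title: str, sections: list[tuple[str, str]]) -> Path:
    """Write a title plus (heading, body) sections to a UTF-8 Markdown file."""
    lines = [f"# {title}", ""]
    for heading, body in sections:
        # Each section becomes a level-2 heading followed by its paragraph text.
        lines += [f"## {heading}", "", body.strip(), ""]
    out = Path(path)
    out.write_text("\n".join(lines), encoding="utf-8")
    return out
```

Writing with an explicit `encoding="utf-8"` keeps non-ASCII text intact in editors like Typora regardless of the system's default encoding.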

These examples are merely the tip of the iceberg when it comes to what Open Interpreter can do. If you want to push the boundaries even further, check out the Google Colab notebooks I mentioned earlier. The sky’s the limit, folks!