Midjourney vs DALL-E vs Stable Diffusion: Which One Nails the Human Pose?

The recent release of Midjourney V6 has sparked a wave of excitement, its hyper-realistic images almost making traditional photography seem obsolete.

However, anyone who has dabbled in AI art creation knows a persistent shortcoming that isn’t disappearing anytime soon: rendering the human body accurately in a specific pose.

Today, let’s embark on an exploratory project, putting the leading AI image generators (Midjourney, DALL-E, and Stable Diffusion) to the test with a specific challenge: replicating a particular yoga pose from a photograph sourced from the Unsplash image library.

DALL-E’s Attempt

Kicking off this experiment, we turn to DALL-E. To capture every nuance of the yoga pose, I crafted a detailed prompt:

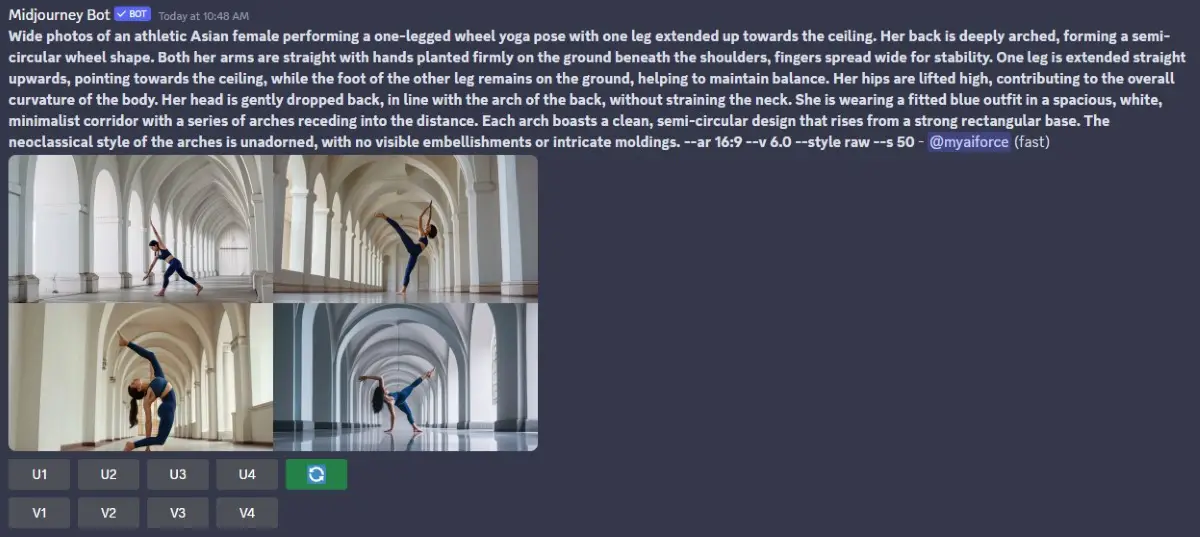

Prompt: Wide photos of an athletic Asian female performing a one-legged wheel yoga pose with one leg extended up towards the ceiling. Her back is deeply arched, forming a semi-circular wheel shape. Both her arms are straight with hands planted firmly on the ground beneath the shoulders, fingers spread wide for stability. One leg is extended straight upwards, pointing towards the ceiling, while the foot of the other leg remains on the ground, helping to maintain balance. Her hips are lifted high, contributing to the overall curvature of the body. Her head is gently dropped back, in line with the arch of the back, without straining the neck. She is wearing a fitted blue outfit in a spacious, white, minimalist corridor with a series of arches receding into the distance. Each arch boasts a clean, semi-circular design that rises from a strong rectangular base. The neoclassical style of the arches is unadorned, with no visible embellishments or intricate moldings.

DALL-E’s results, after a few tries, were remarkable in replicating the pose with near-perfect accuracy.

Yet, the overall image lacked depth and texture, and the subject’s movement felt somewhat artificial.

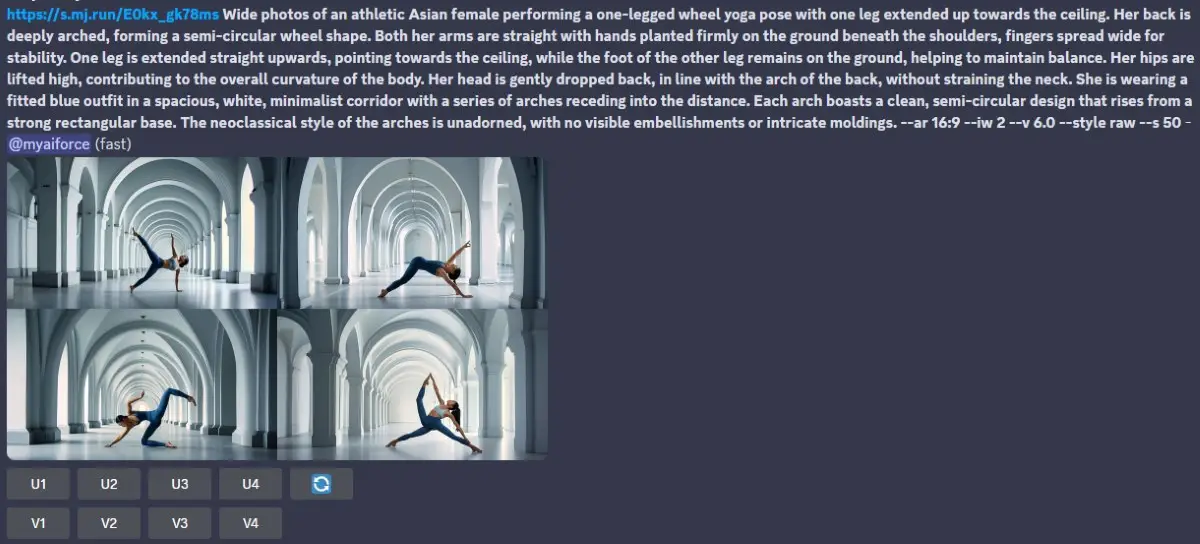

Midjourney’s Interpretation

Next, let’s see how Midjourney V6 fares:

Repeated attempts with Midjourney produced distorted poses, a problem less pronounced in DALL-E’s output. Even with the Unsplash image as a reference, Midjourney struggled to reproduce the pose from the photograph.

However, Midjourney excelled in capturing the scene’s essence and imbued the lighting with a captivating ambiance.

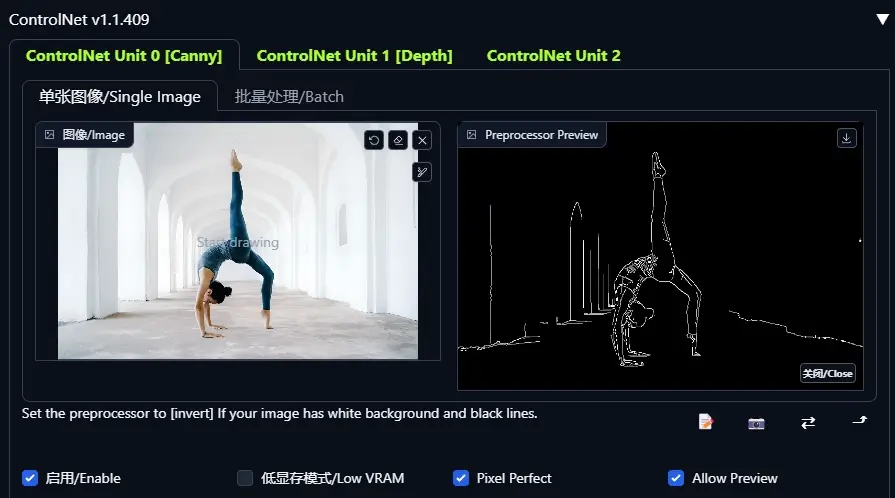

Stable Diffusion’s Approach

Stable Diffusion, admittedly weaker at understanding natural language, requires prompts akin to those for Midjourney V5.2. Relying solely on text prompts for yoga pose images leads to even more pronounced distortions than with Midjourney.

However, Stable Diffusion has a saving grace: the ControlNet extension, which lets me use the Unsplash image as a reference within Stable Diffusion. Combining Canny and Depth gives more precise control: Canny follows the edges of the reference to hold the composition, while Depth preserves how near or far each part of the scene is.
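To give a feel for what an edge-based control image looks like, here is a minimal sketch in plain Python. It is not the actual Canny algorithm or the ControlNet preprocessor: it uses a simple Sobel gradient threshold as a stand-in, and the function name `edge_map`, the `threshold` value, and the toy 5x5 "photo" are all assumptions made for illustration.

```python
# Simplified stand-in for the edge map an edge preprocessor produces.
# NOTE: this is a plain Sobel gradient threshold, not the full Canny
# algorithm (no smoothing, non-maximum suppression, or hysteresis).

def edge_map(gray, threshold=100):
    """Return a binary edge map (255 = edge, 0 = background).

    gray: list of rows, each a list of 0-255 brightness values.
    threshold: hypothetical cutoff on gradient magnitude.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped because the 3x3 kernel needs neighbours.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Sobel kernels: horizontal and vertical brightness change.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 255
    return edges


# Toy 5x5 "photo": dark left side, bright right side.
photo = [[0, 0, 255, 255, 255] for _ in range(5)]
outline = edge_map(photo)
```

Only the pixels along the dark-to-bright boundary are marked as edges. A control image of this kind is what pins the outline of the pose in place, while the text prompt remains free to change colors and materials.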

With some tweaks to the prompt, I altered the color of the yoga outfit and transformed the concrete floor into wood. Some inpainting was necessary to refine the subject’s facial features, though minor distortions remained.

For a more natural look, I merged the face and hands from the original Unsplash photo using Photoshop, culminating in the following image.

This final image has a slightly reddish tint and could benefit from further color correction.
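As a rough idea of how such a tint could be reduced automatically, here is a minimal sketch of gray-world white balancing in plain Python. This is an assumption on my part, not the correction used for the image above: the function name `gray_world_balance` and the two sample pixels are hypothetical, and a real workflow would more likely rely on an image editor's color tools.

```python
def gray_world_balance(pixels):
    """Scale each channel so the three channel averages become equal.

    pixels: list of (r, g, b) tuples with 0-255 values.
    """
    count = len(pixels)
    # Average brightness of the red, green, and blue channels.
    means = [sum(p[c] for p in pixels) / count for c in range(3)]
    target = sum(means) / 3
    # Gain per channel; a channel whose mean is 0 is left unchanged.
    gains = [target / m if m else 1.0 for m in means]
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


# Two hypothetical pixels with a strong red cast.
reddish = [(200, 100, 100), (100, 50, 50)]
balanced = gray_world_balance(reddish)
```

The gray-world assumption is that a scene averages out to neutral gray, so an overly strong red channel is scaled down while green and blue are scaled up. It can overcorrect images that are legitimately dominated by one color, which is why manual adjustment is often still needed.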

Concluding Thoughts

While Midjourney and Stable Diffusion show potential for mimicking reality, they still require auxiliary tools like Photoshop to iron out imperfections.

Nonetheless, the rapid evolution of AI in this field is staggering, with numerous successful applications already in motion. It’s an exciting time to delve into the possibilities of AI art creation.