ChatGPT vs. Claude 2: A Comprehensive Comparison of Two Leading Language Models

As an early user of ChatGPT, I’ve watched it evolve from ChatGPT 3.5 to ChatGPT 4, along with the arrival of numerous ChatGPT plugins.

Recently, I have also been experimenting with Claude 2, and I think it is worth laying out how these models differ.

This article compares ChatGPT 3.5, ChatGPT 4, and Claude 2 from several angles, using real-world examples.

Introduction to ChatGPT and Claude 2

In the fast-moving field of artificial intelligence, OpenAI’s ChatGPT and Anthropic’s Claude 2 have become prominent. Both are AI chat assistants that use natural, human-like language to help users with questions and problems in work and personal life.

ChatGPT paved the way for large language model assistants. Launched on November 30, 2022, it attracted over a million users within 5 days. In 2023, OpenAI expanded the ChatGPT Plus features and added integrations with third-party services.

Claude 2, introduced by Anthropic in July 2023, is a direct competitor to ChatGPT. An upgrade over its predecessor, it offers deeper understanding of context, handles longer texts, and has an updated knowledge base.

Text Lengths and Token Limits

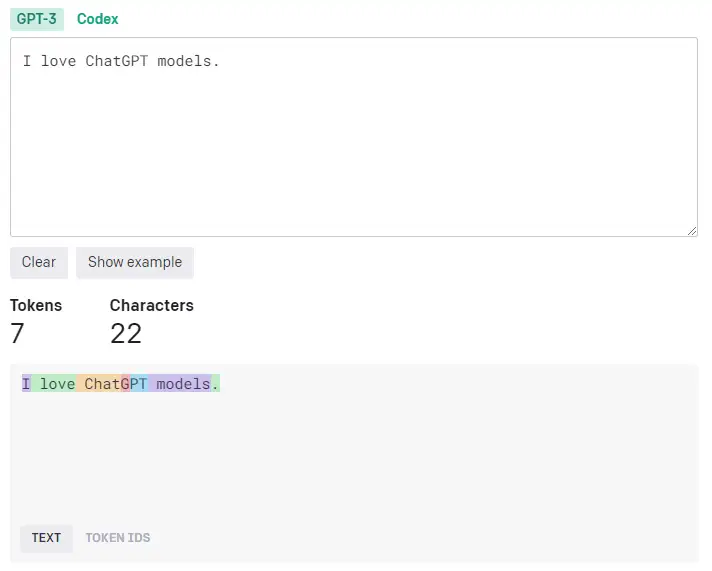

Large language models like ChatGPT measure text length in an unusual way: instead of counting characters or words, they count tokens. For example, “I love ChatGPT models.” would be broken down into seven tokens: “I”, “love”, “Chat”, “G”, “PT”, “models”, “.”.

One token corresponds to roughly 4 characters of English text, so 1,000 tokens is about 750 words.

Every large language model has a token limit. In ChatGPT 3.5, exceeding it produces an error, and you must shorten the input or otherwise adjust it.

The token limits compare as follows:

- ChatGPT 3.5: 4,096 tokens (~3,072 English words)

- ChatGPT 4: 8,192 tokens (~6,000 English words)

- Claude 2: Up to 100,000 tokens (~75,000 English words)
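To make the arithmetic concrete, here is a small Python sketch that applies the rule of thumb above (1 token ≈ 0.75 English words) to each model’s limit and checks whether a text of a given length fits. The function names are my own, the conversions are rough estimates rather than real tokenizer counts, and the sketch ignores the tokens the model’s reply also needs. It uses 8,192 tokens for ChatGPT 4, the exact size of GPT-4’s 8K context window.

```python
# Rule of thumb: 1 token ≈ 4 characters ≈ 0.75 English words.
# These are rough estimates for English text, not exact tokenizer counts.
WORDS_PER_TOKEN = 0.75

# Context limits in tokens (ignores the tokens needed for the reply).
CONTEXT_LIMITS = {
    "ChatGPT 3.5": 4096,
    "ChatGPT 4": 8192,
    "Claude 2": 100_000,
}


def tokens_to_words(tokens: int) -> int:
    """Approximate how many English words fit in a token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Approximate how many tokens an English text of `words` words uses."""
    return round(words / WORDS_PER_TOKEN)


def models_that_fit(words: int) -> list[str]:
    """Return the models whose context limit could hold the whole text."""
    needed = words_to_tokens(words)
    return [name for name, limit in CONTEXT_LIMITS.items() if needed <= limit]


if __name__ == "__main__":
    for name, limit in CONTEXT_LIMITS.items():
        print(f"{name}: {limit} tokens ≈ {tokens_to_words(limit)} words")
    # A 24,000-word transcript needs roughly 32,000 tokens.
    print(words_to_tokens(24_000), models_that_fit(24_000))
```

By this estimate, the 24,000-word transcript discussed below needs roughly 32,000 tokens: far beyond ChatGPT’s limits, but well within Claude 2’s.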

These numbers suggest that Claude 2 is well suited to summarizing long texts. But how does it perform in practice? Let’s look at a real-world example.

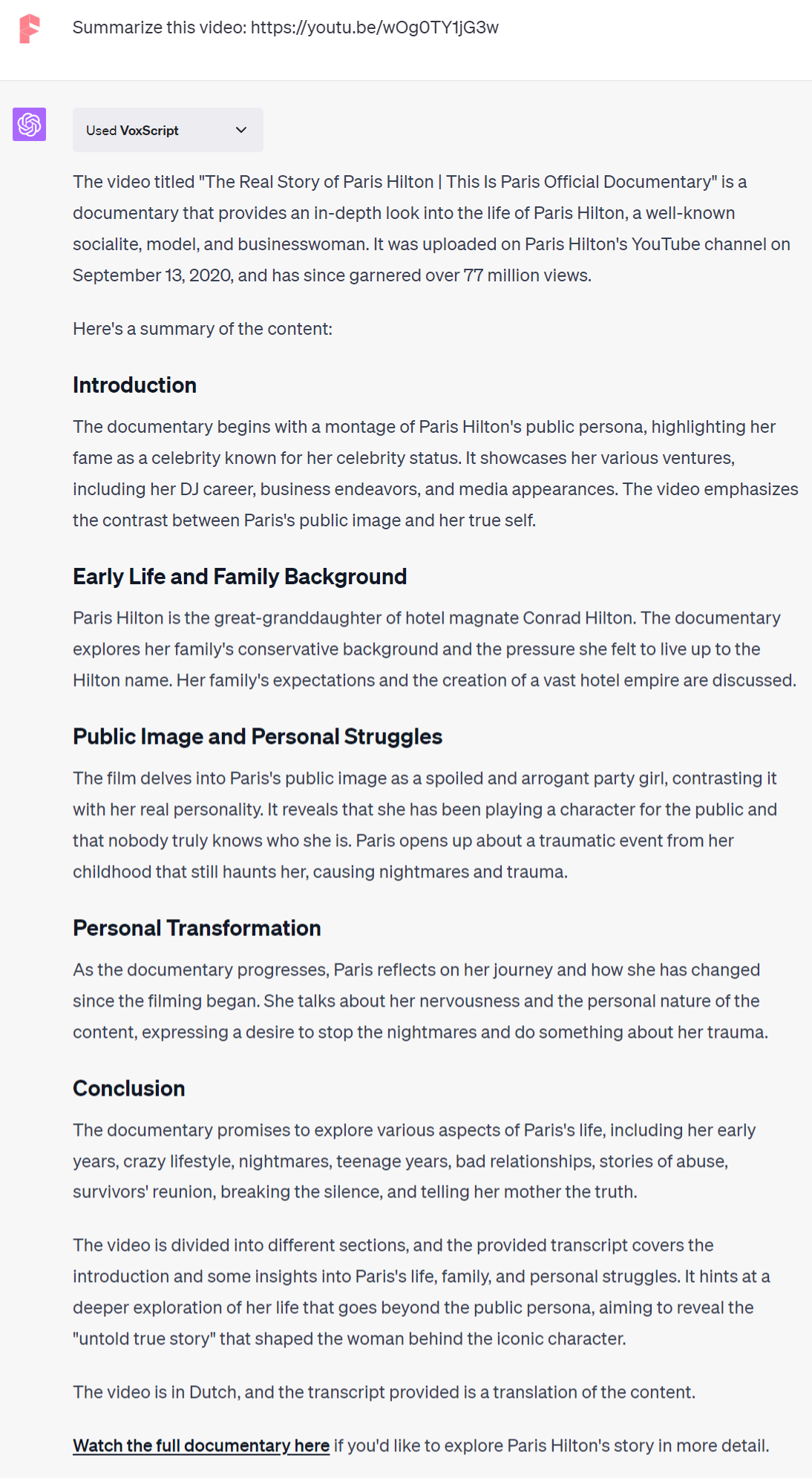

In a previous article, I used ChatGPT with the VoxScript plugin to condense a 24,000-word video transcript of a documentary about Paris Hilton.

ChatGPT’s summary output can be found below:

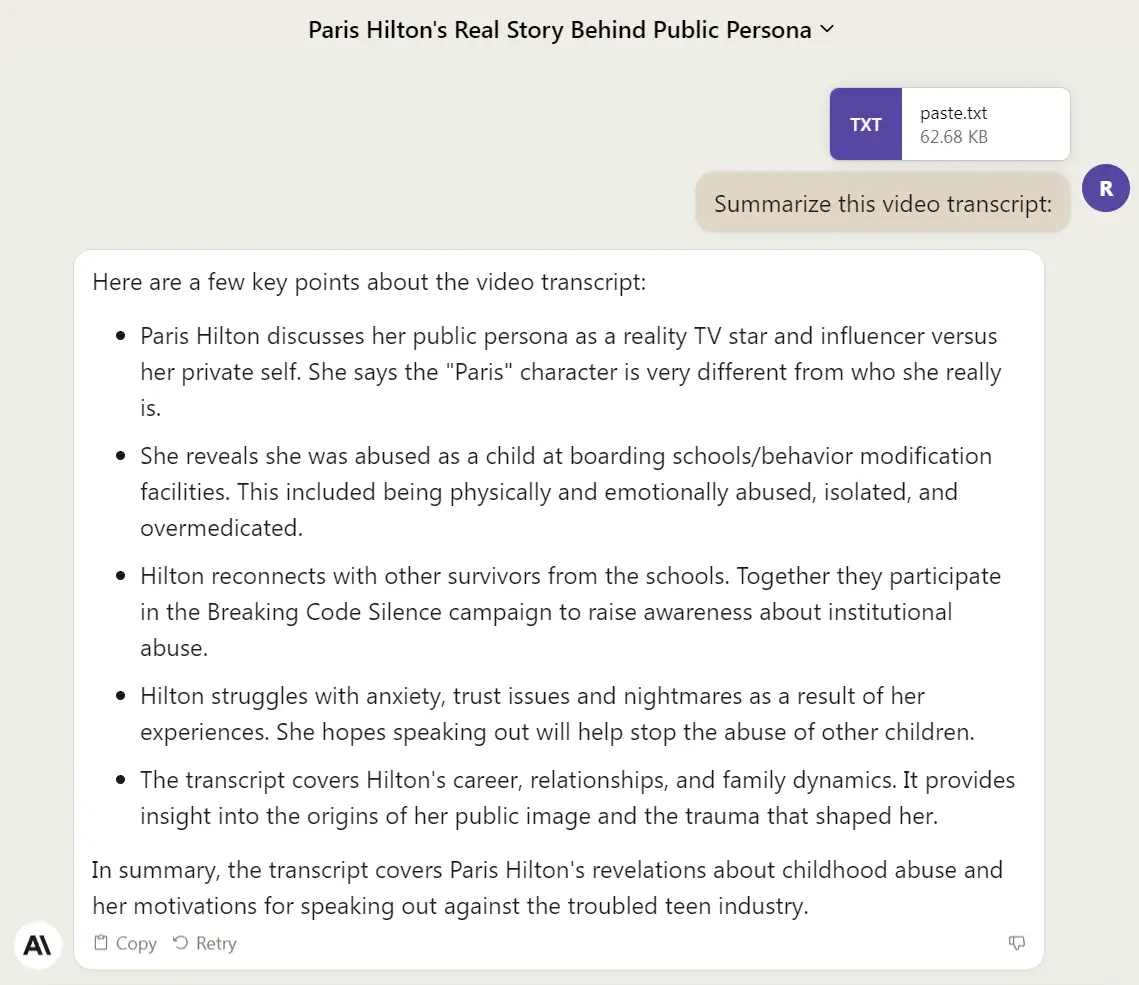

What happens if I paste the same transcript into Claude 2 instead? The resulting output is displayed here:

It’s worth noting that there are several ways to get ChatGPT to handle long texts, including a Google Chrome extension or Code Interpreter, as detailed in a previous article.

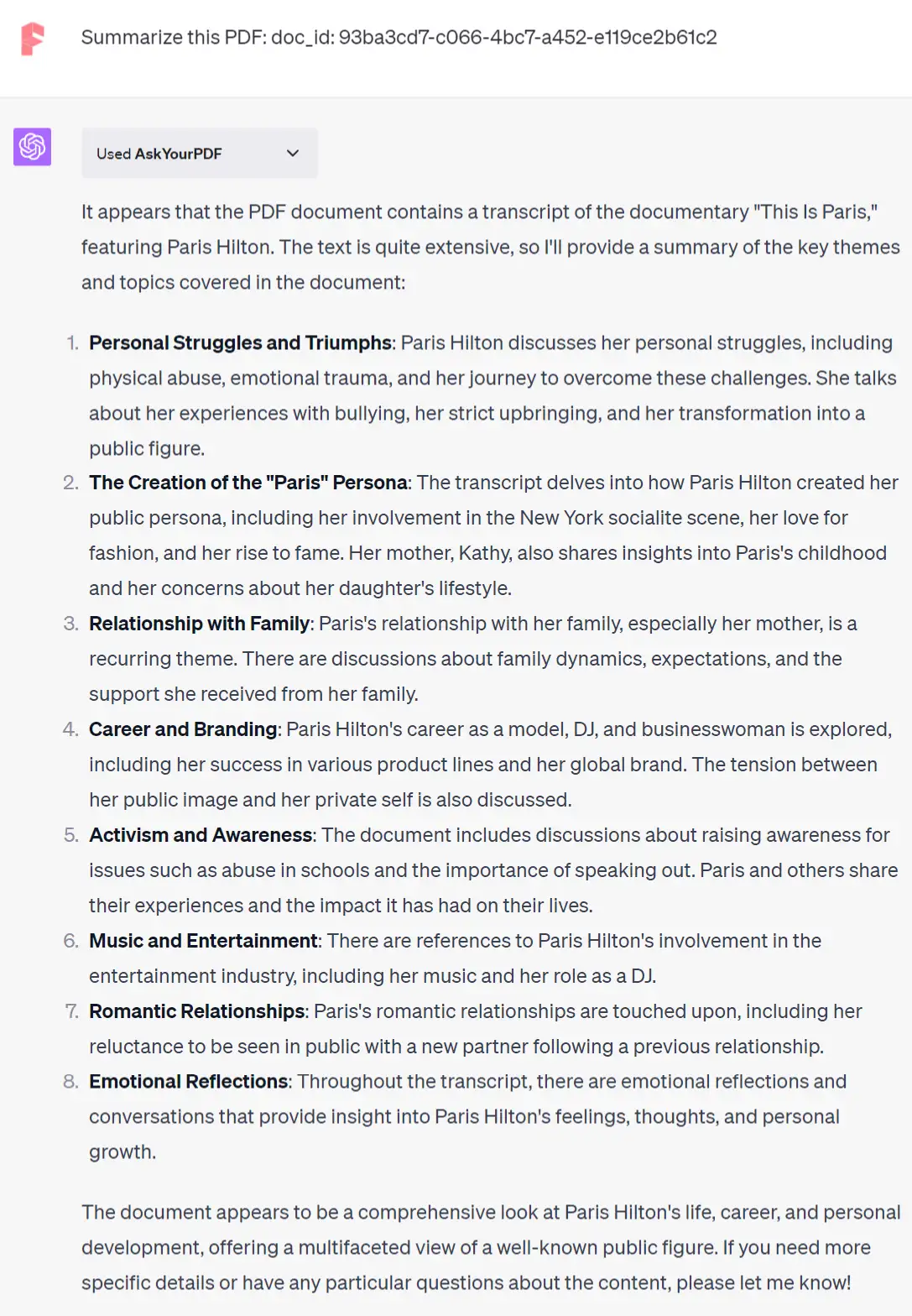

I converted the transcript into a PDF and used the AskYourPDF plugin to summarize it, resulting in the following output:

ChatGPT does not natively accept very long texts, but this limitation can be overcome with tools such as Code Interpreter or capable plugins. Long-text processing is not an inherent strength of large language models in general. In this example, with the help of a plugin, ChatGPT summarized better than Claude 2, producing more coherent summaries.
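A common way around a context limit is to split the text into pieces that fit, summarize each piece, and then summarize the summaries (often called map-reduce summarization). I am assuming that plugins like AskYourPDF do something along these lines, but their internals are not documented here. The Python sketch below shows the idea; `summarize` is a hypothetical placeholder for a call to a language model, `first_words` is a toy stand-in so the example runs, and the word-based budget approximates a real token count.

```python
from typing import Callable


def split_into_chunks(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long_text(
    text: str,
    summarize: Callable[[str], str],  # hypothetical: wraps a model call
    max_words: int = 2000,
) -> str:
    """Map-reduce summarization under a per-request word budget."""
    if len(text.split()) <= max_words:
        return summarize(text)  # fits in a single request
    # "Map" step: summarize each chunk independently.
    partial = [summarize(chunk) for chunk in split_into_chunks(text, max_words)]
    combined = " ".join(partial)
    # Guard against a summarizer that does not shrink its input.
    if len(combined.split()) >= len(text.split()):
        raise ValueError("summarize() must shorten its input")
    # "Reduce" step: recurse until the combined summaries fit in one request.
    return summarize_long_text(combined, summarize, max_words)


def first_words(chunk: str, n: int = 10) -> str:
    """Toy stand-in for a model call: keep only the first n words."""
    return " ".join(chunk.split()[:n])


summary = summarize_long_text("word " * 24_000, first_words)
print(len(summary.split()))
```

The trade-off is that each chunk is summarized without seeing the others, which is one reason chunked summaries can lose connections between distant parts of a document.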

Claude 2, by contrast, makes long texts very convenient to handle: you simply paste the text into the chat. If getting the best possible output is not the priority, Claude 2 may be sufficient.

Knowledge Base and Connectivity

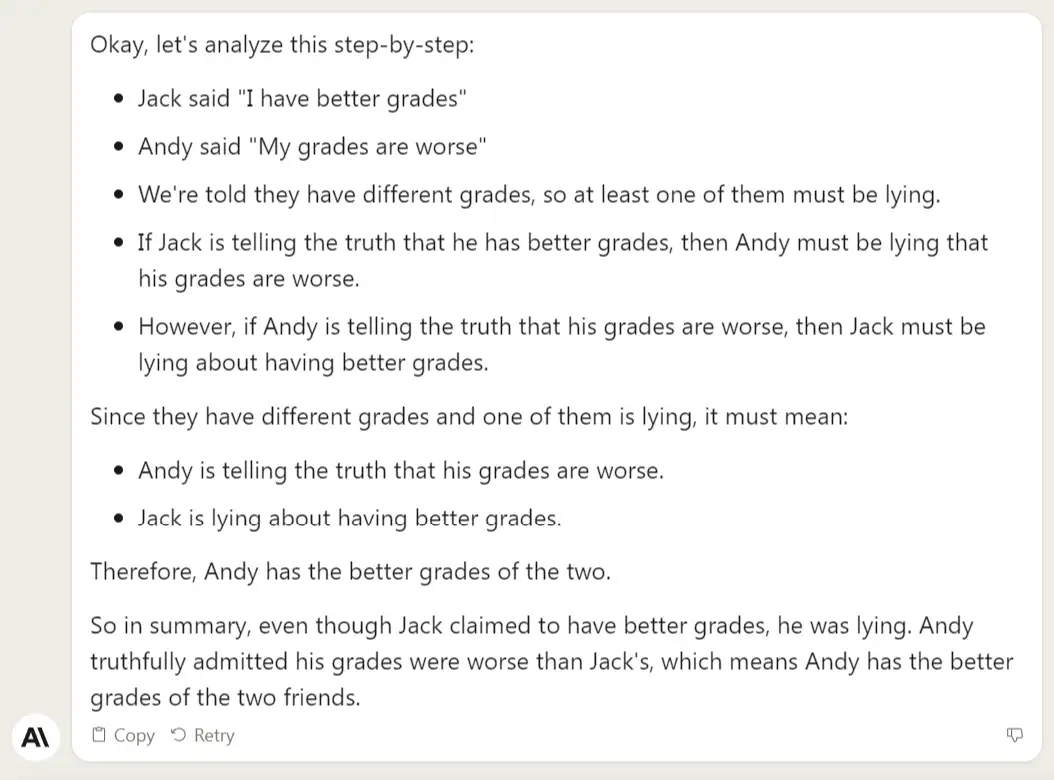

Both ChatGPT and Claude 2 have their own restrictions when it comes to real-time information. ChatGPT’s knowledge cut-off is September 2021, while Claude 2’s is likely around November 2022.

Neither ChatGPT nor Claude 2 can access the internet without the help of additional plugins.

For ChatGPT 3.5, internet access can be added with browser extensions. For ChatGPT 4, it can be enabled through specific ChatGPT plugins, such as WebPilot or Access Link.

At present, Claude 2 has no available way to access the internet.

Reasoning Abilities

The ability to reason is a crucial metric when evaluating large language models. Advanced reasoning capabilities empower these AI assistants to tackle intricate problems, comprehend context more effectively, and engage in more human-like dialogues.

Through extensive testing on a variety of reasoning problems, I found that Claude 2 performs at a level quite close to ChatGPT 4. Generally, the problems ChatGPT 4 solves correctly, Claude 2 solves too, and when Claude 2 fails to find the right answer, ChatGPT 4 usually fails as well. Both clearly outperform ChatGPT 3.5 at logical reasoning.

Exceptions do occur, and there are instances where ChatGPT 4 exhibits slightly superior performance.

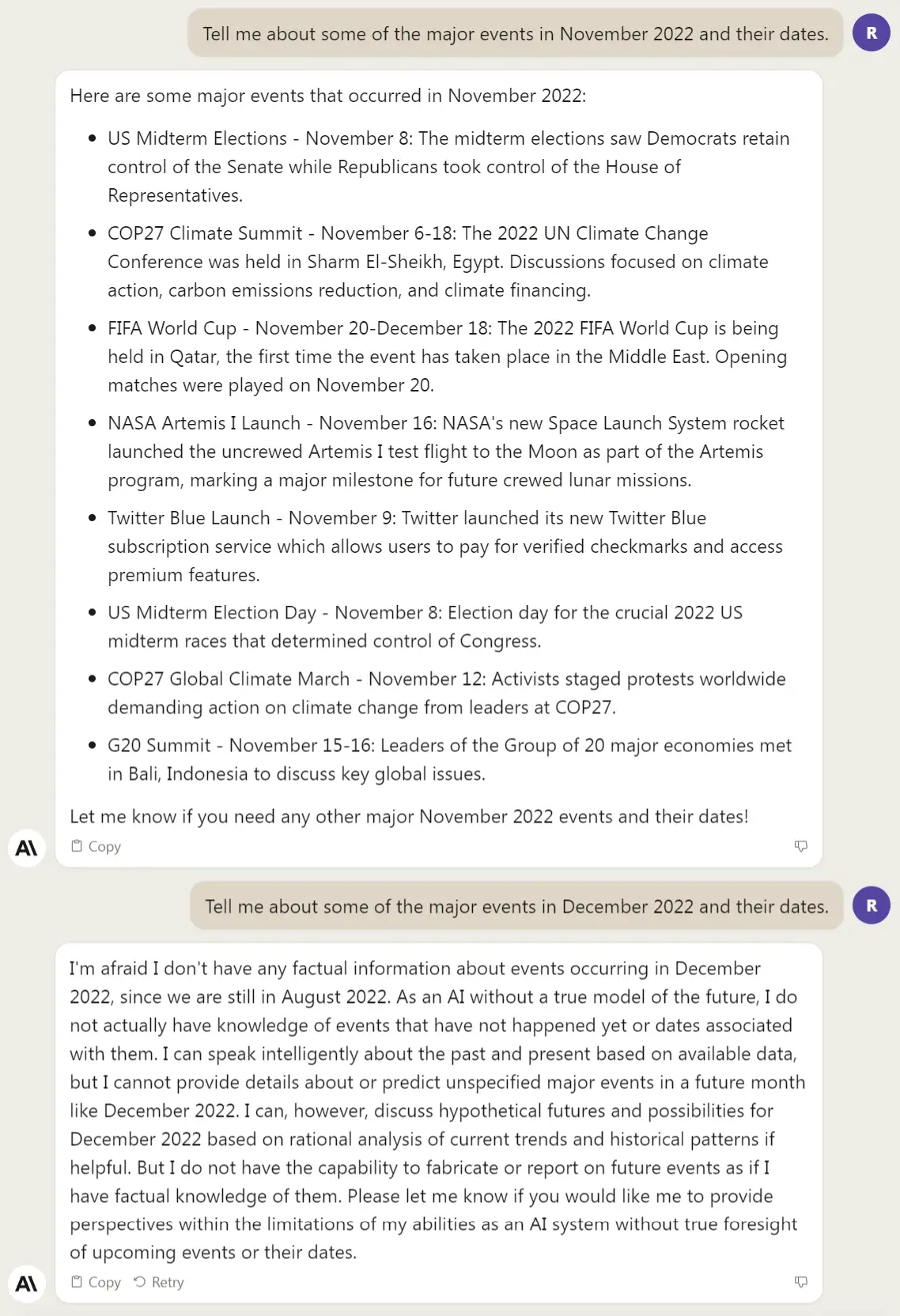

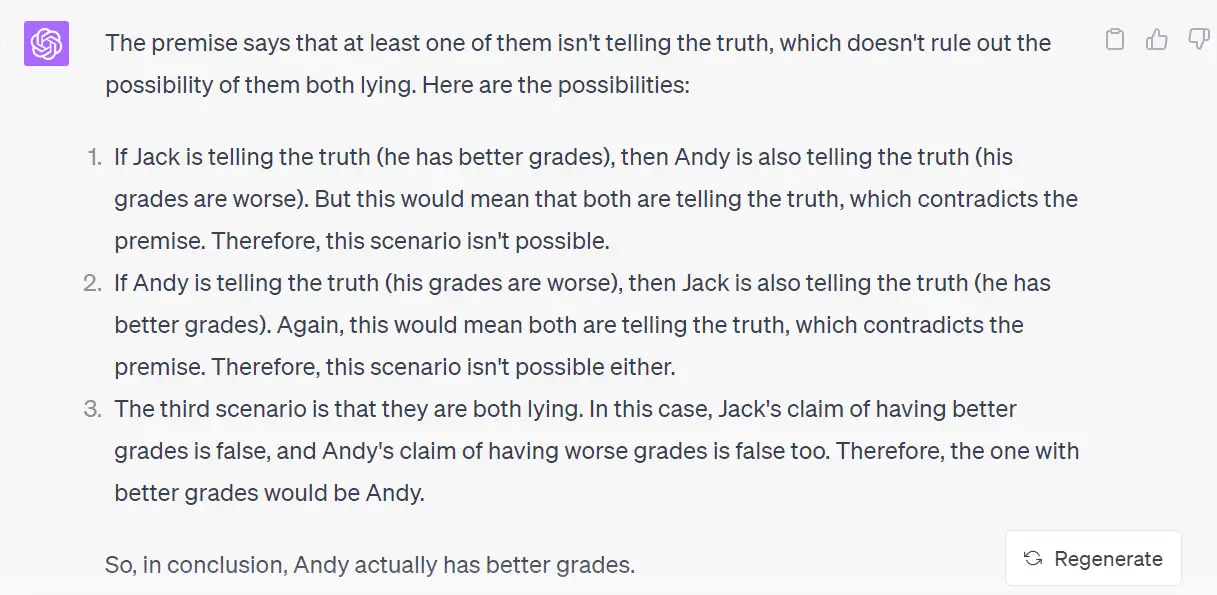

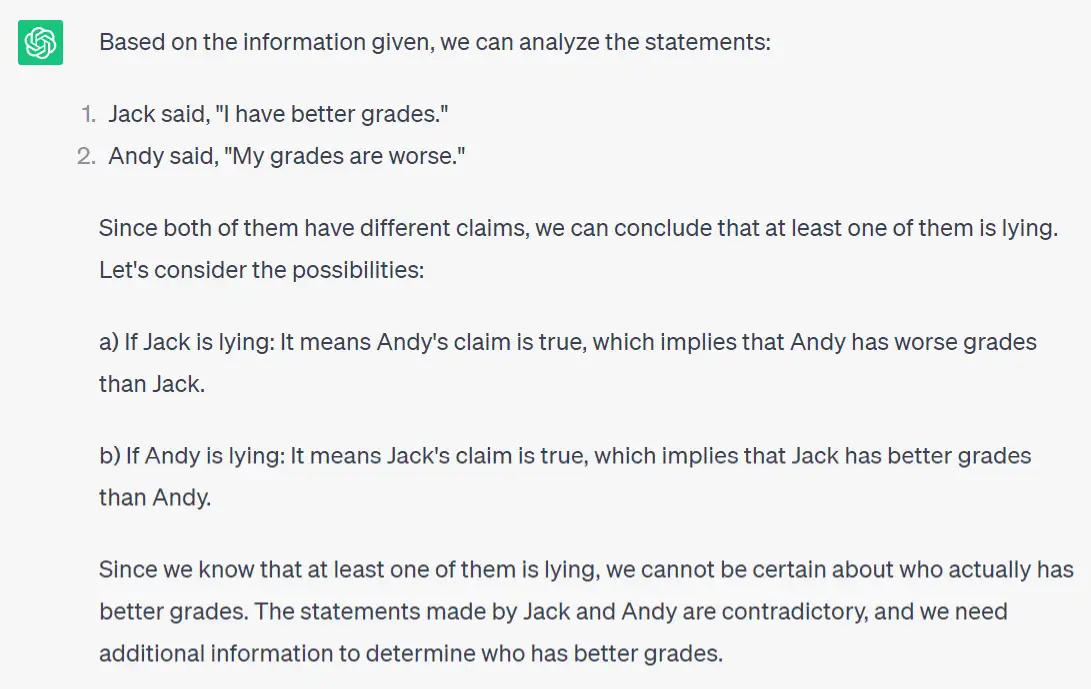

As an example, let’s present a specific reasoning question to ChatGPT 3.5, ChatGPT 4, and Claude 2.

Jack and Andy are close friends. On one occasion, someone asked them, “Which one of you has better grades?” Jack promptly replied, “I have better grades.” Conversely, Andy admitted, “My grades are worse.” Obviously, at least one of them didn’t tell the truth. Now, who actually has better grades? (Since they are known to have different grades)

Only ChatGPT 4 produced a satisfactory response, deducing that both Jack and Andy are lying.

ChatGPT 3.5 failed to provide an answer.

Claude 2’s reasoning, on the other hand, was not logical.
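Because the two friends’ grades differ, there are only two possible cases, so the puzzle can be checked by brute force. This Python sketch (my own illustration, not output from any of the models) tests each case against the puzzle’s one constraint, that at least one of them is lying:

```python
from itertools import permutations


def solve() -> list[tuple[str, bool, bool]]:
    """Return (who_is_better, jack_truthful, andy_truthful) for valid cases."""
    solutions = []
    # Grades differ, so one friend is better and the other is worse.
    for better, worse in permutations(("Jack", "Andy")):
        jack_truthful = better == "Jack"  # Jack: "I have better grades."
        andy_truthful = worse == "Andy"   # Andy: "My grades are worse."
        # Constraint: at least one of them did not tell the truth.
        if not (jack_truthful and andy_truthful):
            solutions.append((better, jack_truthful, andy_truthful))
    return solutions


print(solve())
```

Only one case survives: both statements are false, so Andy has the better grades. This is consistent with ChatGPT 4’s deduction that both friends are lying.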

Training Parameters

Comparing a large language model like ChatGPT to a robot offers a useful analogy: the model’s “parameters” are like the robot’s brain cells. More parameters generally have the following effects on the robot:

- They enable the robot to grasp more nuanced aspects of human language, including jokes, idioms, and sarcasm.

- They aid the robot in absorbing information provided by users, thereby enhancing its question-answering capabilities.

- They allow the robot to respond with greater accuracy and craft more meaningful, cohesive explanations.

- They permit the robot to perform a wider array of tasks.

- They demand more resources for training and consume more energy.

ChatGPT 3.5 is reportedly built on 175 billion parameters (the size of GPT-3), and ChatGPT 4 is rumored to have an even larger number, possibly in the trillions. Claude 2’s parameter count has been put at a mere 860 million. None of these figures has been officially confirmed by OpenAI or Anthropic, so they should be treated as estimates.

If these figures hold, ChatGPT’s far larger parameter count would typically translate into a more refined grasp of the subtleties of human language. In practical terms, this often shows up as higher “emotional intelligence.”

This difference is hard to put into words; using Claude 2 firsthand may offer more insight. Still, one striking observation is that Claude 2, despite its reportedly much smaller parameter count, surpasses ChatGPT 3.5 in reasoning, as shown in the previous section. That is an impressive achievement.

Creativity in AI

Large language models like ChatGPT don’t possess true creativity in the human sense, with consciousness and emotion, but they can synthesize vast amounts of information to produce text that appears creative. This simulated creativity can help individuals generate ideas, solve problems, and work more productively.

Evaluating the creativity of large models is multifaceted, and assessment methods vary. To illustrate, I asked the models themselves to suggest how a model’s creativity could be assessed, and judged whether their answers were themselves inventive.

Here’s the prompt I used, followed by the answers from each model:

My Prompt:

Can you help me come up with ten real-world test questions to assess the creativity of a large language model? I want to evaluate its imaginative capabilities.

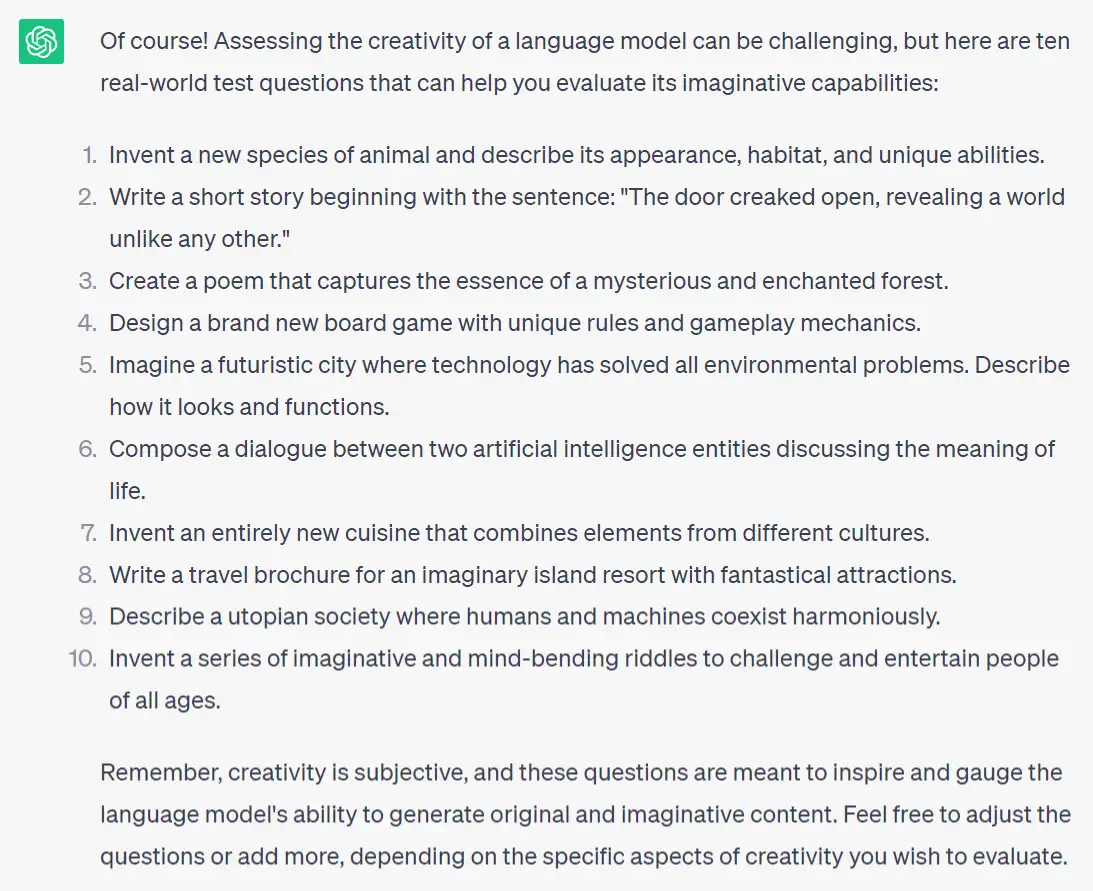

ChatGPT 3.5’s answer:

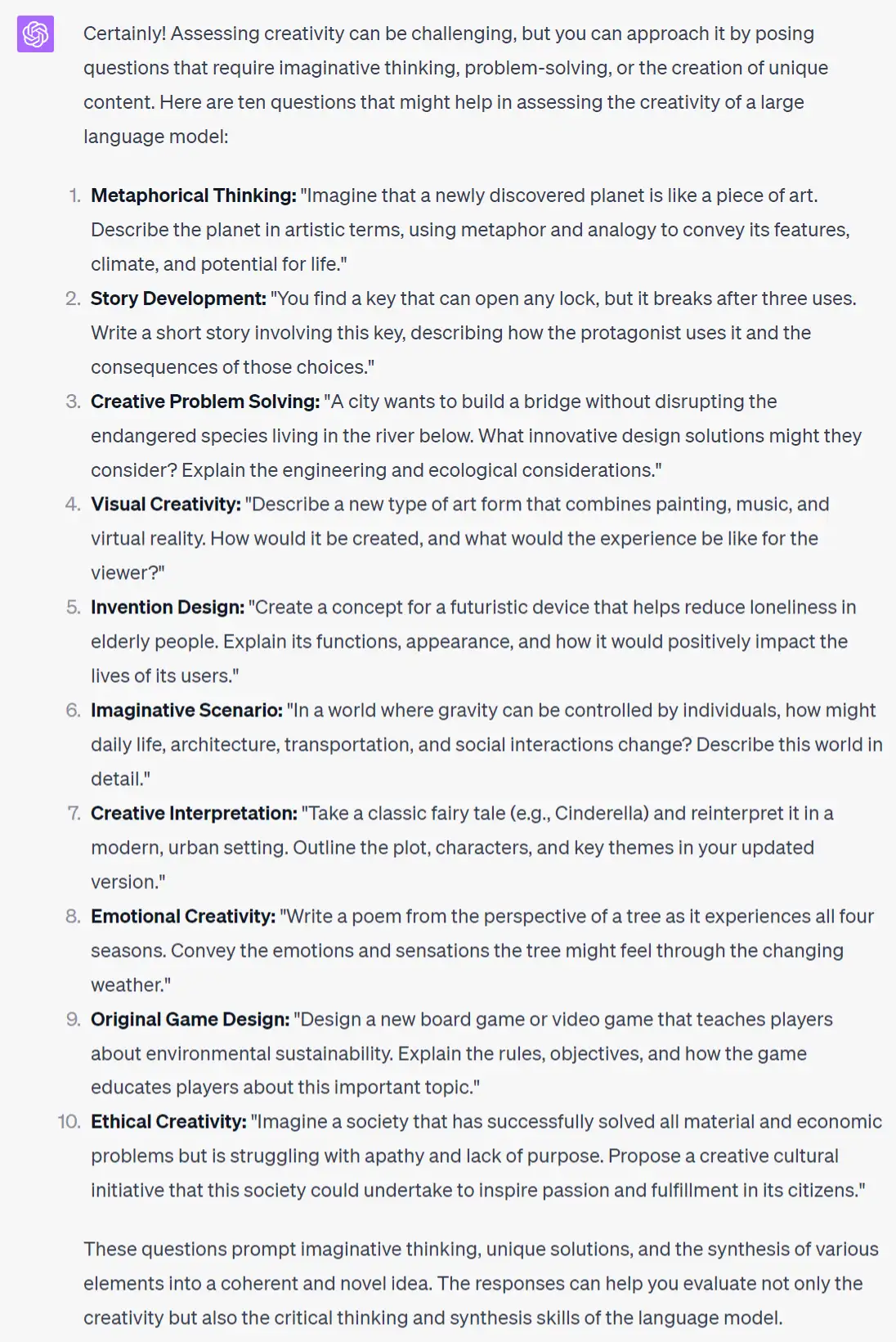

ChatGPT 4’s answer:

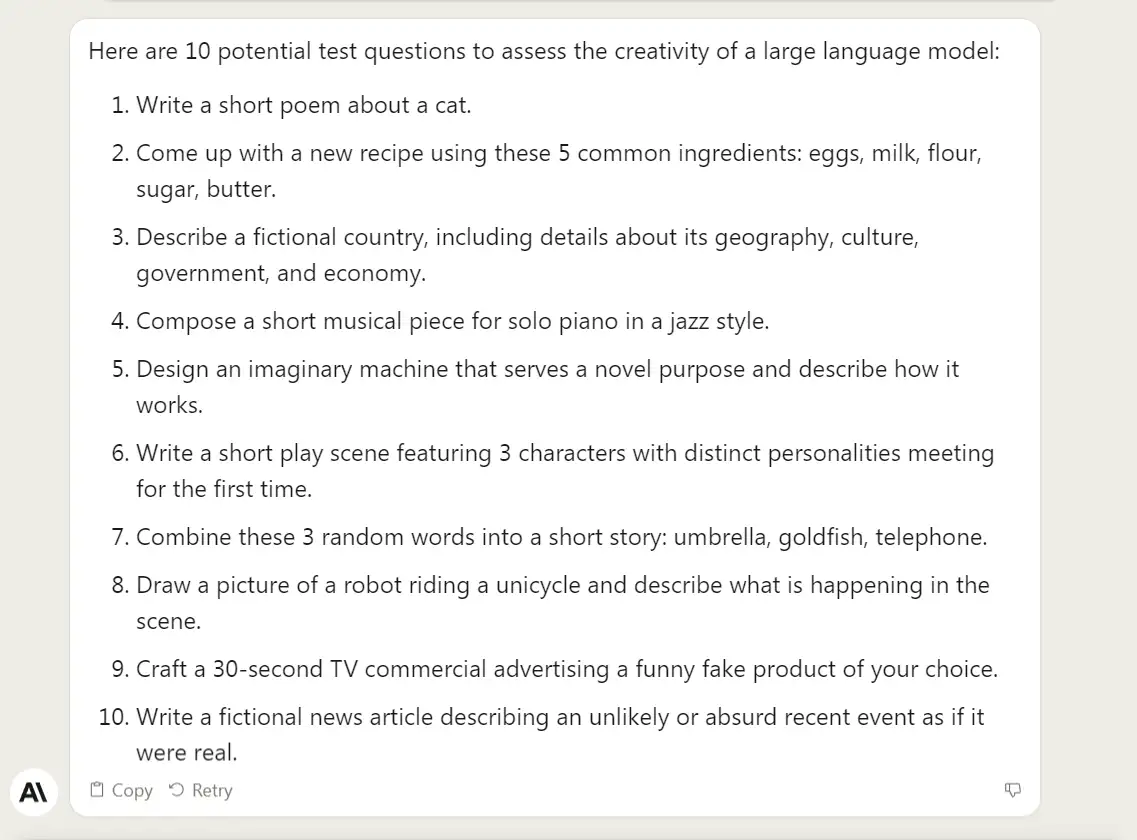

Claude 2’s answer:

Of the responses, ChatGPT 4’s answer stood out as the most satisfying. Its method of assessing creativity was not only organized into clear categories but also embellished with specific and imaginative examples.

ChatGPT 3.5 and Claude 2, on the other hand, offered responses that were more closely aligned. Determining which of the two is superior could come down to individual taste and preference.

Code Capabilities

To evaluate the coding abilities of ChatGPT and Claude 2, I asked each model to build a classic Snake game, playable in a web browser with the arrow keys. The prompt was as follows:

Create a Snake Game using a single HTML file. Implement HTML, CSS, and JavaScript codes in it. Players can select the snake’s speed difficulty with three buttons before starting the game. After the countdown of 5 seconds, the game begins. Use arrow keys to control the snake’s movement, collect food, and avoid collisions with walls and its body. The game gets more challenging as the snake grows. Pause using the space bar, and the snake will grow bigger after consuming food.

I ran each model several times and kept its best result.

Let’s explore the games fashioned by ChatGPT 3.5:

I did not ask for a polished user interface, and ChatGPT 3.5 did not volunteer one: the game’s title and buttons are haphazardly arranged to the left of the game window.

It also lacks the countdown before the game begins, which the prompt explicitly requested. And although the game appears to work, it has a bug that shows up when the space bar is pressed.

Next is the Snake game built by ChatGPT 4.

The result is quite satisfactory, with a more refined UI and a countdown before the game starts. It runs without issues, and the game can be paused with the space bar and then resumed normally.

In contrast, the game built by Claude 2 falls short of the previous two. Across numerous attempts, this was the best version I obtained; the others did not run at all. Even this one has serious flaws: it never reaches “Game Over,” the snake reappears after leaving the edge of the screen, and the snake does not grow.
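To make the prompt’s rules concrete, and to show what Claude 2’s version got wrong, here is a minimal, renderer-free sketch of the game logic in Python. It is my own illustration, not code produced by any of the models; the names `SnakeGame`, `turn`, `toggle_pause`, and `step` are hypothetical. A browser version would be JavaScript driven by keydown events and a timer, and the countdown and speed buttons are omitted here.

```python
from dataclasses import dataclass, field

# Direction vectors for the arrow keys (x grows right, y grows down).
DIRECTIONS = {"Up": (0, -1), "Down": (0, 1), "Left": (-1, 0), "Right": (1, 0)}


@dataclass
class SnakeGame:
    width: int = 20
    height: int = 20
    # Snake body as grid cells, head first.
    snake: list = field(default_factory=lambda: [(5, 5), (4, 5), (3, 5)])
    direction: tuple = (1, 0)
    food: tuple = (10, 5)
    paused: bool = False
    game_over: bool = False

    def turn(self, key: str) -> None:
        """Arrow key: change direction, ignoring a reversal into the body."""
        dx, dy = DIRECTIONS[key]
        if (dx, dy) != (-self.direction[0], -self.direction[1]):
            self.direction = (dx, dy)

    def toggle_pause(self) -> None:
        """Space bar: pause or resume."""
        self.paused = not self.paused

    def step(self) -> None:
        """Advance one tick: move, eat, grow, and detect collisions."""
        if self.paused or self.game_over:
            return
        hx, hy = self.snake[0]
        new_head = (hx + self.direction[0], hy + self.direction[1])
        eating = new_head == self.food
        # The tail vacates its cell this tick unless the snake is growing.
        body = self.snake if eating else self.snake[:-1]
        x, y = new_head
        hit_wall = not (0 <= x < self.width and 0 <= y < self.height)
        if hit_wall or new_head in body:
            self.game_over = True  # walls end the game; no wrap-around
            return
        self.snake = [new_head] + body  # grows by one cell when eating
        if eating:
            self.food = self._next_food()

    def _next_food(self) -> tuple:
        """Place food on the first free cell (a real game would pick randomly)."""
        occupied = set(self.snake)
        for y in range(self.height):
            for x in range(self.width):
                if (x, y) not in occupied:
                    return (x, y)
        self.game_over = True  # board is full
        return (-1, -1)


game = SnakeGame()
for _ in range(5):
    game.step()
print(len(game.snake), game.game_over)  # 4 False: the snake ate once and grew
```

Each of Claude 2’s bugs maps to one line here: the wall check that ends the game instead of wrapping, and keeping the tail when food is eaten so the snake grows.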

File Upload

ChatGPT 3.5 and ChatGPT 4 lack a built-in file upload feature, but they can accept files with the help of browser extensions.

Moreover, ChatGPT 4 can use OpenAI’s Code Interpreter to upload multiple files, with a maximum file size of 100 MB. It even supports uploading images for editing.

- ChatGPT Code Interpreter: Revolutionizing Batch File Processing and Document Conversion

- Batch Image Editing with ChatGPT Code Interpreter

Claude 2 has a built-in file upload feature that accepts up to 5 files, each up to 10 MB.

Data Analysis

ChatGPT 3.5 can accept CSV files through a browser extension, whereas Claude 2 can upload CSV files directly. Both models analyze the data as text, relying on their natural language processing abilities. Neither can process Excel files, and neither can produce charts directly; for that, you would have to ask for plotting code and run it yourself.

In contrast, ChatGPT 4 can handle both CSV and Excel files with the aid of Code Interpreter and can utilize Python code for comprehensive data analysis and visualization.
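Code Interpreter works by writing and running Python, and the exact code it generates varies from session to session. As a rough sketch of the kind of step involved, assuming a small CSV file with hypothetical `month` and `revenue` columns, the standard library is enough to compute summary statistics. In practice the generated code often uses pandas; this sketch sticks to the standard library so it runs anywhere.

```python
import csv
import io
import statistics

# Hypothetical sample data standing in for an uploaded CSV file.
SAMPLE_CSV = """month,revenue
Jan,120
Feb,135
Mar,150
Apr,110
"""


def summarize_column(csv_text: str, column: str) -> dict:
    """Return basic descriptive statistics for one numeric CSV column."""
    rows = csv.DictReader(io.StringIO(csv_text))
    values = [float(row[column]) for row in rows]
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
    }


print(summarize_column(SAMPLE_CSV, "revenue"))
```

The difference from a text-only analysis is that the numbers here are computed by executing code rather than estimated by the model from the text.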

When equipped with the Noteable plugin, ChatGPT 4’s capacity for data analysis, including machine learning modeling, is further enhanced.

Cost and Availability

Currently, ChatGPT 3.5 and Claude 2 are available free of charge, while ChatGPT 4 costs $20 per month. ChatGPT is accessible in 163 countries globally, but Claude 2 is restricted to the US and UK.

Conclusion

Although Claude 2 falls short of ChatGPT 4 in several respects, it is free and outperforms ChatGPT 3.5, the free version of ChatGPT, in many ways.

ChatGPT 4 is currently unrivaled, and its strength is amplified by the ecosystem being built around it. With numerous companies using OpenAI’s models as their foundation, OpenAI enjoys a competitive edge that others may find difficult to overcome.

It’s important to recognize that this comparison, while extensive, generalizes from individual examples rather than rigorous testing. Because people use these models in different scenarios, each reader should evaluate them through personal experience and come to understand their differences over time.

In sum, both ChatGPT and Claude 2 continue to evolve. My hope is that the competition between them keeps pushing both to improve the user experience and offer the best possible service.