Navigating ChatGPT’s Token Limitations for Extended Use

ChatGPT’s prowess in natural language processing is highly regarded, yet its token limitations often frustrate users. Although alternatives like Claude 2 by Anthropic offer larger token capacities, ChatGPT remains more widely used.

This article aims to guide you through overcoming these token limitations. It offers techniques to upload longer texts and enables ChatGPT to generate outputs as extensive as 10,000 words.

Through practical, real-world examples, we’ll walk you through the process, unlocking more of what ChatGPT has to offer.

What Is the Token Limit in ChatGPT?

ChatGPT measures text length differently from how humans do. Instead of counting characters or words, ChatGPT uses a system of tokens to segment a sentence into smaller parts.

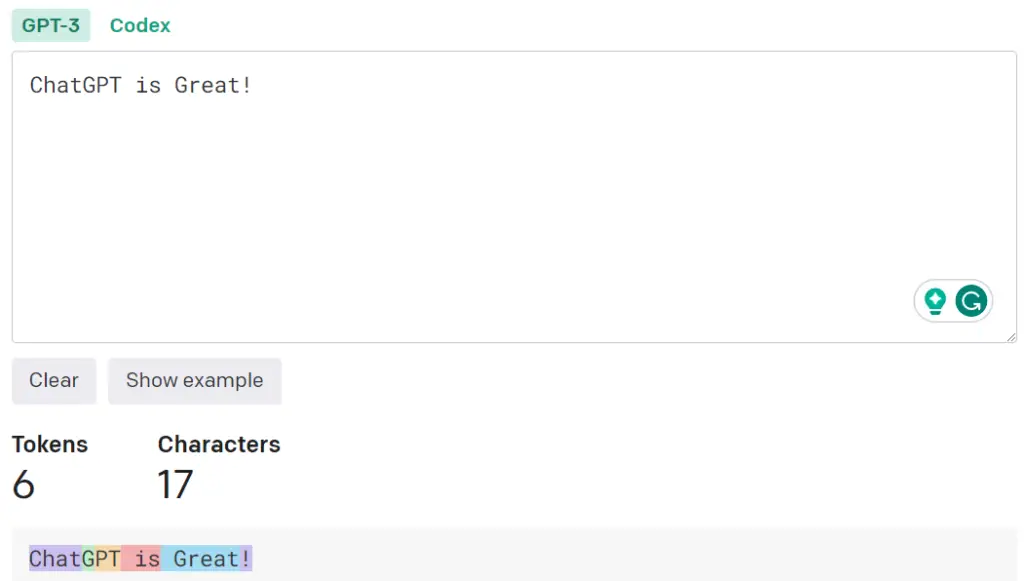

A token can be a single word, subword, or even a character, based on the method of tokenization. To illustrate, the sentence “ChatGPT is great!” could be divided into 6 tokens like [“Chat”, “G”, “PT”, ” is”, ” great”, “!”].

To translate tokens into characters, you can use OpenAI’s Tokenizer OpenAI Tokenizer. Alternatively, you can rely on an approximate formula:

- 1 Token is roughly equal to 4 English characters

- 1 Token is approximately ¾ of an English word

- 100 Tokens equate to about 75 English words

It’s important to note that token limits encompass both input and output in a conversation. For instance, ChatGPT 3.5 has a token limit of 4096, while ChatGPT 4 has a higher limit of 8192 tokens.

The token limit exists primarily for technical reasons, such as memory constraints and computational efficiency. GPUs or TPUs, the hardware that runs models like ChatGPT, have finite physical memory. Exceeding the token limit in a conversation would either make the operation fail or significantly slow it down.

Additionally, as the number of tokens increases, the computational load rises steeply, negatively affecting response time. That is why GPT-4 is less responsive than GPT-3.5.

However, there are situations where lengthy submissions to ChatGPT are necessary. For example, you might want ChatGPT to summarize an entire book or generate a long piece of content.

How to Submit Text Exceeding ChatGPT’s Token Limit

If you have text to submit that surpasses the token limit, you have several options:

- You could switch to a model with a higher token limit in OpenAI’s Playground, such as “gpt-4.5-turbo-16k” or “gpt-4-32k”. However, this option incurs additional costs and leaves the ChatGPT interface, so it won’t be covered here.

- You can compose a prompt indicating that your submission will be in multiple parts. For example, you could say, “The text I am submitting is divided into several segments. Please wait until all parts are provided before summarizing or responding to any questions.” Once all parts are uploaded, you can proceed with asking ChatGPT questions or assigning tasks.

- Consider using the “ChatGPT File Uploader Extended” plugin for Google Chrome. This tool automatically segments your long text for submission to ChatGPT.

- Alternatively, you can convert your text into a PDF and upload it using AskYourPDF.

- Another option is to place your text in a notepad file and upload it through ChatGPT’s Code Interpreter.

For more specific guidelines, refer to my previous post.

Strategies to Extend ChatGPT’s Output Beyond Token Limits

If you need ChatGPT to produce an output that goes beyond its token limit, you have several approaches:

- Occasionally, a “Continue” button may appear when ChatGPT’s response hits the token limit. However, this feature is not consistent and may occur randomly.

- If a response is truncated due to the token limit, simply typing “Continue” can prompt ChatGPT to resume and complete the answer.

- If your requirement involves generating text that surpasses the token limit, it’s advisable to specify the text length in terms of words or characters. For instance, you could request, “Write a 10,000-word essay on generative AI.”

- Should you request a word count that exceeds the token limit, ChatGPT is likely to inform you that the content will be segmented into parts and output sequentially. In such cases, continue to prompt ChatGPT to produce more text.

- Alternatively, you can ask ChatGPT to generate an outline or produce different sections based on an outline you provide.

For real-world examples of these strategies in action, please refer to the following section.

Practical Example: Converting a YouTube Lecture into Detailed Notes

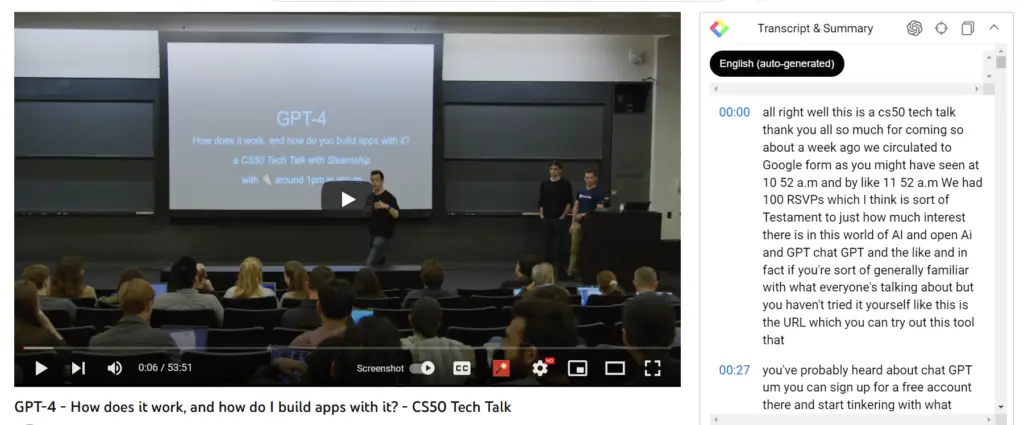

Let’s say I have a task: There’s a 55-minute lecture on GPT-4 by Harvard University available on YouTube. The video’s subtitled text is over 10,000 words long, and I want to condense it into a 10,000-word set of notes. Though a 10,000-word lecture may not necessarily yield 10,000 words of notes, the goal is to produce detailed notes and allow ChatGPT to refine them. Here’s how to go about it:

1/ Subtitle Extraction: First, I utilized a Google Chrome plugin called YouTube Summarizer with ChatGPT to copy the video’s subtitle text into a text document. This plugin can also summarize YouTube videos; for more details on how to use it, see the linked information. 👉 How to Summarize Youtube Video Content with ChatGPT or Claude

2/ Text Upload: I then activated another Google Chrome plugin, “ChatGPT File Uploader Extended,” which I mentioned earlier. I used this plugin to break the lengthy text document into smaller segments for submission to ChatGPT.

3/ Task Assignment: After all parts were uploaded, the plugin displayed a completion message. At this point, I was ready to assign tasks to ChatGPT.

I have finished reviewing the document and I’m ready to assist you with your inquiries.

4/ Prompt Creation: I crafted a specific prompt for ChatGPT, asking it to turn the text into detailed notes.

I’d like to convert all your previous responses (the YouTube transcript) into a comprehensive Obsidian note with a target word count of approximately 10,000 words. The aim is to capture every nuance and detail from the original transcript. Here are the specific steps and guidelines:

Preliminary Outline: Before diving into the conversion, please create an outline that breaks down the transcript into sections. Indicate the estimated word count for each section to ensure we reach the overall target of 10,000 words.

Detail Retention: As you convert the transcript, make sure to retain all the details. This includes not just the main points but also any anecdotes, examples, or specific phrases.

Clarification: If you encounter any ambiguous or unclear segments in the transcript, use your own knowledge to clarify and elaborate on those points.

Token Limit: I understand that there’s a token limit for each interaction. Feel free to use multiple interactions to complete the task. Just make sure to maintain continuity and coherence across interactions.

Pause and Reflect: Before finalizing each section, take a moment to review and ensure that it aligns well with the original transcript and fills in any gaps effectively.

Please proceed with the task once you’ve read and understood these guidelines.

5/ ChatGPT’s Steps: ChatGPT outlined the next steps it would take to fulfill the task.

Acknowledged! To begin the process of converting the YouTube transcript into a comprehensive Obsidian note with a target word count of approximately 10,000 words, I’ll follow the steps and guidelines you’ve provided. I’ll create a preliminary outline, retain all the details from the original transcript, clarify any ambiguous points, and make sure each section aligns with the original content. I’ll also manage the token limit by using multiple interactions as needed to maintain continuity. Let’s start by creating the preliminary outline based on the sections provided in the transcript. Shall we proceed?

6/ Continuation: I prompted ChatGPT to continue, after which it provided an outline for the note-taking process.

- Introduction (Word Count: 400)

- Introduction to the talk about language models and GPT-4.

- Mention of OpenAI’s mission and the goals of the presentation.

- Language Models and GPT-4 (Word Count: 800)

- Explanation of what language models are and their capabilities.

- Introduction to GPT-4 (Generative Pre-trained Transformer 3).

- Mention of GPT-4’s large-scale training and data sources.

- Discussion about GPT-4’s architecture and prompt-based generation.

- The Writing Atlas Project (Word Count: 750)

- Introduction to the Writing Atlas project and its purpose.

- Explanation of the idea of a “knowledge cutoff” in language models.

- Use of GPT-4 to generate short stories and writing prompts.

- Building AI-Generated Utility Apps (Word Count: 1300)

- Discussion about building AI-generated utility apps.

- Explanation of the process of creating useful applications.

- Mention of the concept of “prompt engineering” to guide GPT-4.

- Introduction to “template looping” to improve output quality.

- Handling Hallucinations and Improving Accuracy (Word Count: 1200)

- Explanation of the hallucination problem in GPT-4.

- Discussion of strategies to reduce hallucinations and improve accuracy.

- Mention of the use of examples and fine-tuning to mitigate issues.

- Explanation of using prompts to guide GPT-4’s responses.

- Applications and Business Value (Word Count: 1100)

- Explanation of the applications of GPT-4 in various domains.

- Discussion about the value of GPT-4 in startups and companies.

- Introduction to different models of utilizing GPT-4, including private versions.

- Privacy and IP Considerations (Word Count: 900)

- Explanation of privacy implications related to prompts.

- Discussion of different deployment options: SAS, private VPC, and open source.

- Mention of OpenAI’s privacy policy update regarding prompts.

- GPT-4’s Role in Future Computing (Word Count: 900)

- Explanation of GPT-4’s role in future computing.

- Discussion about the concept of GPT-4 as a “second processor.”

- Introduction to the idea of incorporating GPT-4 into various applications.

- Conclusion (Word Count: 400)

- Recap of key points discussed throughout the presentation.

- Mention of the potential impact and trajectory of language models.

Total Estimated Word Count: 9,750

7/ Note Completion: Following the outline, I instructed ChatGPT to finalize the notes, section by section, adhering to a predetermined word count. While it didn’t hit the 9,750-word mark, the resulting notes were quite detailed.

This approach demonstrates how to effectively leverage ChatGPT and supplementary tools to handle extensive content, even when faced with token limitations.

Conclusion

Navigating the token limitations of ChatGPT may initially seem like a hurdle, especially for those looking to utilize its capabilities for extensive content. However, as we’ve explored, there are various strategies and tools available to effectively manage this limitation.

From smart text segmentation to specialized prompts and even leveraging browser plugins, there are ways to fully tap into ChatGPT’s robust natural language processing features.

Whether you’re summarizing a lengthy academic lecture or generating a detailed paper, these techniques offer a roadmap to unlocking ChatGPT’s full potential. By understanding and adapting to these constraints, you can make the most of what this powerful language model has to offer.